Enums (or tagged unions) whose variants vary in size are prone to significant

memory fragmentation in Rust. That’s because we need to allocate enough

data to accommodate the largest variant.

Figure 1: Consider the following enum:

pub enum Foo {

A(u8),

B(u16),

C(u32),

D(u64),

}

Because of the space needed for tagging and alignment, this type is 16 bytes long.

This presents real pain when collecting a large number of them into a

Vec or HashMap.

The padding can be dealt with using some form of

struct of arrays (SoA)

transformation that stores the tag in a separate allocation.

However, reducing the variant fragmentation is not so trivial.

You could hand-roll specialized data structures for a particular enum

that reduce fragmentation to a minimum; but doing this generically

for an arbitrary enum with maximum memory efficiency is close to

impossible in Rust. The only options we have are proc-macros, which

compose poorly (no #[derive] on third-party code or type aliases)

and are not type aware (unless using workarounds based on

generic_const_expr, which infect the call graph with verbose trait

bounds and don’t work with generic type parameters). Zig on the

other hand let’s us perform the wildest data structure transformations

in a generic and concise way.

Before I go into the implementation details, I’d like to explain why

reducing the aforementioned memory fragmentation is useful in practice.

Background

To me, one of the biggest motivators for efficient enum arrays

has been compilers. One problem that keeps coming up when designing

an AST is figuring out how to reduce its memory footprint. Big ASTs

can incur a hefty performance penalty during compilation, because

memory bandwidth and latency are a frequent bottleneck in compiler

frontends. Chandler Carruth’s

video on the Carbon compiler has been

making the rounds on language forums. In it he describes how a

parsed clang AST regularly consumes 50x more memory than the

original source code!

Alright, so what does this have to do with enums? Well, the

most common way of representing syntax tree nodes is via some kind

of recursive (or recursive-like) data structure. Let’s define a node

for expressions in Rust, using newtype indices for indirection:

enum Expr {

Unit,

Number,

Binary(Operation, ExprId, ExprId),

Ident(Symbol),

Eval(ExprId, ExprSlice),

BlockExpression(ExprId, StatementSlice)

}

Note: We can write an AST node in OCaml for comparison:

type expr = | Unit | Number | Binary of op * expr * expr | Ident of symbol | Eval of expr * stmt list

A big difference compared to Rust is that we can express truly

recursive data types without any form of explicit indirection.

That’s because the runtime system and garbage collector take care

of the memory bookkeeping for us.

The problem we have now is that we want to improve the packing efficiency

of those enums. A simple Vec(Expr) will consume sizeof(Enum)

amount of memory for every element, which corresponds to the size of

the largest variant + tag + padding. Luckily, there are some ways

of dealing with this.

Reducing Fragmentation

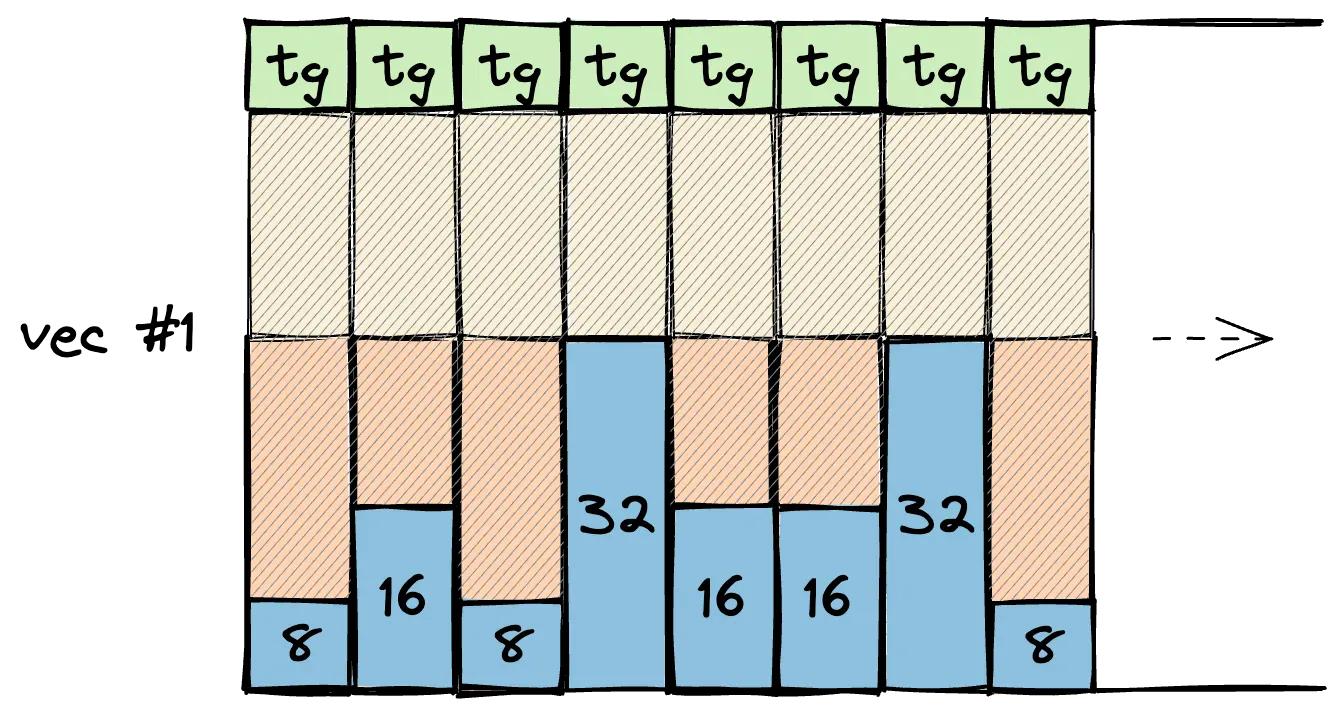

Let’s take a simple example of a 3-variant enum with member sizes

8, 16 and 32 bits. Storing those in a regular Vec will look like this:

Figure 2:

Here every element reserves a large amount of space to accommodate the 32-bit variant and to satisfy its alignment.

The most common way to improve packing efficiency is by just k