If you don’t have time to read this post, these four graphs give most of the argument:

I’m not usually interested in writing simple debunking posts, but I regularly talk and read about the debate around emissions associated with AI and it’s completely clear to me that one side is getting it entirely wrong and spreading misleading ideas. These ideas have become so widespread that I run into them constantly, but I haven’t found a good summary explaining why they’re wrong, so I’m putting one together.

At the last few parties I’ve been to I’ve offhandedly mentioned that I use ChatGPT, and at each one someone I don’t know has said something like “Ew… you use ChatGPT? Don’t you know how terrible that is for the planet? And it just produces slop.” I’ve also seen a lot of popular Twitter posts (many above 100,000 likes) very confidently announcing that it is bad to use AI because it’s burning the planet. Common points made in these conversations and posts are:

- Each ChatGPT search emits 10 times as much as a Google search.

- A ChatGPT search uses 500 mL of water.

- ChatGPT as a whole emits as much as 20,000 US households per day. It uses as much water as 200 Olympic swimming pools’ worth of water each day.

- Training an AI model emits as much as 200 plane flights from New York to San Francisco.

The one incorrect claim in this list is the 500 mL of water point. It’s a misunderstanding of an original report which said that 500 mL of water are used for every 20-50 ChatGPT searches, not every search. Every other claim in this list is true, but also paints a drastically inaccurate picture of the emissions produced by ChatGPT and other large language models (LLMs) and how they compare to emissions from other activities. These are not minor errors—they fundamentally misunderstand energy use, and they risk distracting the climate movement.
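To make the corrected water figure concrete, here is a minimal sketch of the arithmetic. It uses only the numbers quoted above (500 mL per 20-50 searches); the constant and function names are illustrative and do not come from the original report.

```python
# Per-search water use implied by the report as described above:
# 500 mL is shared across 20 to 50 searches, not used by each search.
WATER_PER_BATCH_ML = 500
SEARCHES_PER_BATCH_LOW = 20
SEARCHES_PER_BATCH_HIGH = 50


def water_per_search_ml(batch_ml, searches_per_batch):
    """Average water per search, in mL, when batch_ml covers a batch of searches."""
    return batch_ml / searches_per_batch


# Fewer searches per batch means more water attributed to each search.
upper_ml = water_per_search_ml(WATER_PER_BATCH_ML, SEARCHES_PER_BATCH_LOW)
lower_ml = water_per_search_ml(WATER_PER_BATCH_ML, SEARCHES_PER_BATCH_HIGH)

print(f"{lower_ml:.0f} to {upper_ml:.0f} mL per search")  # 10 to 25 mL per search
```

On these numbers, the "500 mL per search" claim overstates per-search water use by a factor of 20 to 50.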

One of the most important shifts in talking about climate has been the collective realization that individual actions like recycling pale in comparison to the urgent need to transition the energy sector to renewables. The current AI debate feels like we’ve forgotten that lesson. After years of progress in addressing systemic issues over personal lifestyle changes, it’s as if everyone suddenly started obsessing over whether the digital clocks in our bedrooms use too much energy and began condemning them as a major problem.

Separately, LLMs have been an unbelievable life improvement for me. I’ve found that most people who haven’t actually played around with them much don’t know how powerful they’ve become or how useful they can be in your everyday life. They’re the first piece of new technology in a long time that I’ve become insistent that absolutely everyone try. If you’re not using them because you’re concerned about the environmental impact, I think that you’ve been misled into missing out on one of the most useful (and scientifically interesting) new pieces of technology in my lifetime. If people in the climate movement stop using them they will lose a lot of potential value and ability to learn quickly. This would be a shame!

On a meta level, there’s a background assumption about how one is supposed to think about climate change that I’ve become exhausted by, and that the AI emissions conversation is awash in. The bad assumption is:

- To think and behave well about the climate you need to identify a few bad individual actors/institutions and mostly hate them and not use their products. Do not worry about numbers or complex trade-offs or other aspects of your own lifestyle too much. Identify the bad guys and act accordingly.

Climate change is too complex, important, and interesting as a problem to operate using this rule. When people complain to me about AI emissions I usually interpret them as saying “I’m a good person who has done my part and identified a bad guy. If you don’t hate the bad guy too, you’re suspicious.” This is a mind-killing way of thinking. I’m using this post partly to demonstrate how I’d prefer to think about climate instead: we coldly look at the numbers, the institutions, and actors who we can actually collectively influence, and we respond based on where we will actually have the most positive effect on the future, not based on who we happen to be giving status to in the process. I’m not inclined to give status to AI companies. A lot of my job is making people worry more about AI in other areas. What I want is for people to actually react to the realities of climate change. If you’re worried at all about your own use of AI contributing to climate change, you have been tricked into constructing monsters in your head and you need to snap out of it.

Here are some assumptions that will guide the rest of this post:

If you’re not trying to reduce your emissions, you’re not worried about the climate impact of individual LLM use anyway. I’ll assume that you are interested in reducing your emissions and will write about whether LLMs are acceptable to use.

There’s a case to be made that people who care about climate change should spend much less time worrying about how to reduce their individual emissions and much more time thinking about how to bring about systemic change to make our energy systems better (the effects you as an individual can have on our energy system often completely dwarf the effects you can have via your individual consumption choices), but this is a topic for another post.

Our energy system is so reliant on fossil fuels that individuals cannot eliminate all their personal emissions. Immediately stopping all global CO2 emissions would cause billions of deaths. We need to phase out emissions gradually by transitioning to renewables and making trade-offs in energy use. If everyone concerned about climate change adopted a zero-emissions lifestyle today, many of them would die. The rest would lose access to most of modern society, leaving them powerless to influence energy systems. Climate deniers would take over society. Individual zero-emissions living isn’t feasible right now.

The average children’s hospital emits more CO2 per day than the average cruise ship. If we followed the rule “Cut the highest emitters first” we’d prioritize cutting hospitals over cruise ships—which is clearly a bad idea. Reducing emissions requires weighing the value of something against its emissions, not blindly cutting based on CO2 output alone. We should ask questions like “Can we achieve the same outcome with lower emissions?” or “Is this activity necessary?” But the rule “Find the highest emitting thing in a group of activities and cut it” doesn’t work.

In this post, I’ll compare LLM use to other activities and resources of similar usefulness. If you believe LLMs are entirely useless, then we should stop using them—but I’m convinced they are useful. Part of this post will explain why.

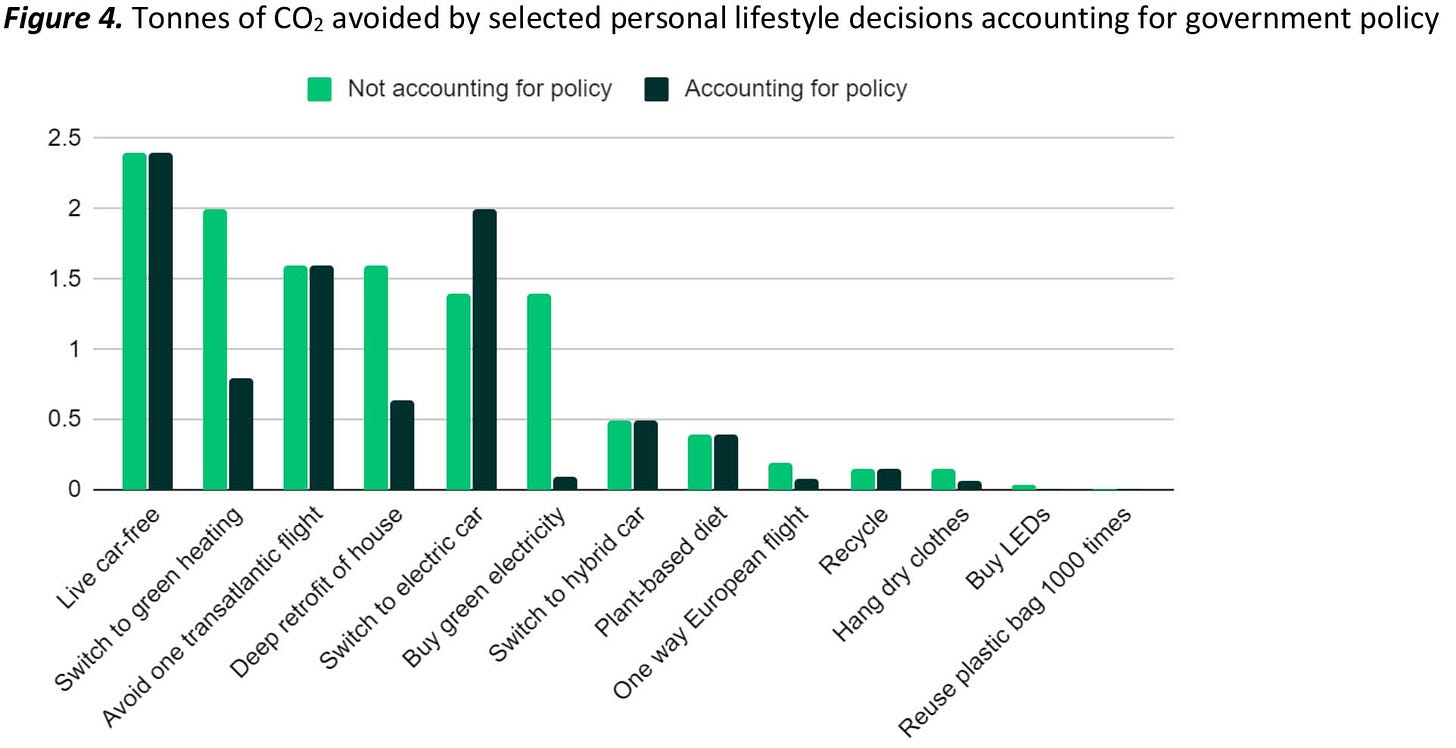

If climate change is an emergency that requires lots of people working collectively to fix in limited time, we cannot afford to get distracted by focusing too much of our effort and thinking on extremely small levels of emissions. The climate movement has seen a lot of progress and success in shifting its focus away from individual actions like turning off lights when leaving a room to big systemic changes like building smart grid infrastructure or funding renewable tech. Even if you are only focused on lifestyle changes, it is best to focus on the most impactful lifestyle changes for climate. It would be much better for climate activists to spend all their time focused on helping people switch to green heating than encouraging people to hang dry their clothes:

If the climate movement should not focus its efforts on getting individual people to hang dry their clothes, it should definitely not focus on convincing people not to use ChatGPT:

Another common concern about LLMs is their water use. This matters even though it’s not a direct cause of climate change. I’ll address that in the second part of the post. There might be other concerns as well (for example, the supply chains involved in constructing data centers in the first place), but from what I can tell those concerns also apply to basically all computers and phones, and I don’t see many people saying that we need to immediately stop using our computers and phones for the sake of the climate. If you think there are other bad environmental results of LLMs that I’m missing in this post, I’d be excited to hear about them in the comments!

Any statistics about the energy consumption of individual internet activities have large error bars, because the internet is so gigantic and the energy use is spread across so many devices. Any source I’ve used has arrived at these numbers by dividing one very large uncertain number by another. I’ve tried my best to report numbers as they exist in public data, but you should assume there are significant error bars in either direction. What matters is the proportions more than the very specific numbers.

If LLMs are not useful at all, any emissions no matter how minute are not worth the trade-off, so we should stop using them. This post depends on LLMs being at least a little useful, so I’m going to make the case here.

I think my best argument for why LLMs are useful is to just have you play around with Claude or ChatGPT and try asking it difficult factual questions you’ve been trying to get answers to. Experiment with the prompts you give it and see if asking very specific questions with requests about how you’d like the answer to be framed (bullet-points, textbook-like paragraph) gets you what you want. Try uploading a complicated text that you’re trying to understand and use the prompt “Can you summarize this and define any terms that would be unfamiliar to a novice in the field?” Try asking it for help with a complicated technical problem you’re dealing with at work.

If you’d like testimonials from other people you can read people’s accounts of how they use LLMs. Here’s a good one. Here’s another. This article is a great introduction to just how much current LLMs can do.

LLMs are not perfect. If they were, the world would be very strange. Human-level intelligence existing on computers would lead to some strange things happening. Google isn’t perfect either, and yet most people get a lot of value out of using it. Receiving bad or incorrect responses from an LLM is to be expected. The technology is attempting to recreate a high level conversation with an expert in any and every domain of human knowledge. We should expect it to occasionally fail.

I personally find LLMs much more useful as a tool for learning than most of what exists on the internet outside of high quality specific articles. Most content on the internet isn’t the Stanford Encyclopedia of Philosophy or Wikipedia. If I want to understand a new topic, it’s often much more useful for me to read a ChatGPT summary than watch an hour of some of the best YouTube content about it. I can ask very specific clarifying questions whose answers would otherwise take a long time to dig up around the internet.

What’s the right way to think about LLM emissions? One suspicious move in a lot of claims about LLMs is to compare their emissions to those of physical real-world objects. When talking about global use of ChatGPT, there are a lot of comparisons to cars, planes, and households. Another suspicious move is to compare them to regular online activities that don’t normally come up in conversations about the climate (when was the last time you heard a climate scientist bring up Google searches as a significant cause of CO2 emissions?). The reason this is suspicious is that most people are lacking three key intuitions:

Without these intuitions, it is easy to make any statistic about AI seem like a ridiculous catastrophe. Let’s explore each one.

It is true that a ChatGPT question uses 10x as much energy as a Google search. How much energy is this? A good first question to ask is when you last heard a climate scientist bring up Google search as a significant source of emissions. If someone told you that they had done 1000 Google searches in a day, would your first thought be that the climate impact must be terrible? Probably not.

The average Google search uses 0.3 watt-hours (Wh) of energy. The average ChatGPT question uses 3 Wh, so if you choose to use ChatGPT over Google, you are using an additional 2.7 Wh of energy.
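As a rough sketch of that subtraction, the snippet below takes the two per-query figures quoted above at face value and scales the difference to any number of questions. The names are illustrative, and the per-query figures carry the large error bars already mentioned.

```python
# Per-query energy figures as quoted in this post (both are rough estimates).
GOOGLE_SEARCH_WH = 0.3
CHATGPT_QUESTION_WH = 3.0


def extra_energy_wh(n_questions):
    """Extra energy (Wh) from asking ChatGPT instead of Googling, n_questions times."""
    return n_questions * (CHATGPT_QUESTION_WH - GOOGLE_SEARCH_WH)


ratio = CHATGPT_QUESTION_WH / GOOGLE_SEARCH_WH

print(f"ratio: {ratio:.0f}x")                                      # ratio: 10x
print(f"extra per question: {extra_energy_wh(1):.1f} Wh")          # 2.7 Wh
print(f"extra per 100 questions: {extra_energy_wh(100) / 1000:.2f} kWh")  # 0.27 kWh
```

The ratio is large, but the absolute difference stays small even after a hundred questions.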

How concerned should you be about spending 2.7 Wh? 2.7 Wh is enough to:

- Drive a sedan at a consistent speed for 15 feet

In Washington DC where I live, the household cost of 2.7 Wh is $0.000432.
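The cost figure can be checked with a short sketch. The electricity rate below is not stated in the post; it is back-calculated from the two quoted numbers (2.7 Wh costing $0.000432), so treat it as an inferred assumption rather than an official tariff. The function name is illustrative.

```python
# Figures quoted in this post.
EXTRA_WH_PER_QUESTION = 2.7
QUOTED_COST_USD = 0.000432  # household cost of 2.7 Wh in Washington DC

# Assumption: the rate implied by the two quoted figures, in USD per kWh.
implied_rate_usd_per_kwh = QUOTED_COST_USD / (EXTRA_WH_PER_QUESTION / 1000)


def extra_cost_usd(n_questions, rate_usd_per_kwh=implied_rate_usd_per_kwh):
    """Electricity cost of the extra energy for n_questions ChatGPT questions."""
    extra_kwh = n_questions * EXTRA_WH_PER_QUESTION / 1000
    return extra_kwh * rate_usd_per_kwh


print(f"implied rate: ${implied_rate_usd_per_kwh:.2f} per kWh")  # $0.16 per kWh
print(f"1,000 questions: ${extra_cost_usd(1000):.2f}")           # $0.43
```

At that implied rate, the extra electricity for a thousand questions costs well under a dollar.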

Sitting down to watch 1 hour of Netflix has the same impact on the climate as asking ChatGPT 300 questions in 1 hour. I suspect that if I announced at a party that I had asked ChatGPT 300 questions in 1 hour I might get accused of hating the Earth, but if I announced that I had watched an hour of Netflix or that I drove 0.8 miles in my sedan the reaction would be a little different. It would be strange if we were having a big national conversation about limiting YouTube watching or never buying books or avoiding uploading more than 30 photos to social media at once or limiting ourselves to 1 email