This post is brought to you by my sponsor, Warp.

This was a timed post. The way these work is that if it takes me more than one hour to complete the post, an applet that I made deletes everything I’ve written so far and I abandon the post. You can find my previous timed post here. I placed the advertisement before I started my writing timer.

The authors of scientific papers often say one thing and find another; they concoct a story around a set of findings that they might not have even made, or that their results actually contradict. This happens surprisingly often, and it’s a very serious issue for a few reasons. For example:

- Most people don’t read papers at all, so what they hear is often polluted second-hand information. Those who do read papers usually read only the titles and abstracts, and those are frequently wrong.

- People trust scientists and scientific institutions, and they should be able to trust scientists and scientific institutions. If that trust is broken, an important institution can lose much of its power to do good.

- People trust peer-reviewed work, and they should be able to trust in the power of peer-reviewed work. If that trust is broken (and at present it should be), a potentially important tool loses much of its power to do good.

- Scientific institutions trust scientists and allocate funding based on what scientists tell them, on the assumption that scientists are trustworthy. When that trust is violated, they’re liable to misallocate funding and research effort.

- And the list goes on…

At times, this violation of trust is so egregious that it’s hard to see how the work got published in the first place. Ideally, outright lying is something that gets caught in peer review, or that people are simply too ashamed to do. But though you might think better of scientists than many of them deserve, plenty of them still lie brazenly.

Lying in scientific papers happens all the time.

For example, last year I dissected a paper that several newspapers had reported on which alleged that physically high ceilings in test-taking locations made students score worse on their exams. This paper’s abstract, title, text, and the public remarks to journalists from the authors all implied that was what they found, but their actual result—correctly shown in a table in the paper and reproducible from their code and data—was the complete opposite: higher ceilings were associated with higher test scores! Making a viral hubbub about this managed to get the paper retracted—eventually—but the retraction notice barely mentioned any of the paper’s problems and, instead, said that whatever issues warranted retraction were examples of good ole “honest error”.

Usually when a paper contains lies, they’re hidden a bit better than that. Consider the concept of an Everest Regression. We know Mount Everest is very cold, but a clever person can argue that it’s not cold, once you control for its high altitude. The high altitude of Mount Everest is why it’s cold, but that doesn’t matter. To the researcher claiming Mount Everest is actually warm, all that matters is the result of their ‘analysis’, where they’ve ‘controlled’ for a variable that is, incidentally, the key explanatory variable in this case. This can also be extended so, for example, McDonald’s is equivalent to a Michelin 3-Star restaurant after controlling for the quality of ingredients, preparation, ambience, and price.
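To make the trick concrete, here is a minimal simulation with entirely hypothetical numbers: weather stations whose temperature depends only on altitude (an assumed lapse rate of 6.5 °C per km), with no separate "Everest effect" built in. The helper names `simulate_stations` and `ols` are illustrative. Comparing Everest stations with everywhere else shows Everest is far colder; adding altitude as a control makes the Everest coefficient collapse to roughly zero, which is the "Everest isn't cold" result.

```python
import numpy as np

def simulate_stations(n_everest=50, n_other=950, lapse_rate=6.5, seed=0):
    """Hypothetical station data in which temperature depends only on altitude."""
    rng = np.random.default_rng(seed)
    altitude_km = np.concatenate([
        rng.uniform(5.0, 8.8, n_everest),  # stations on Everest
        rng.uniform(0.0, 3.0, n_other),    # stations everywhere else
    ])
    on_everest = np.concatenate([np.ones(n_everest), np.zeros(n_other)])
    # Temperature (C) falls with altitude; no separate "Everest effect" is built in.
    temp_c = 15.0 - lapse_rate * altitude_km + rng.normal(0.0, 1.0, altitude_km.size)
    return altitude_km, on_everest, temp_c

def ols(y, *regressors):
    """Ordinary least squares with an intercept; returns the coefficient vector."""
    X = np.column_stack([np.ones_like(y), *regressors])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta

altitude_km, on_everest, temp_c = simulate_stations()
raw = ols(temp_c, on_everest)[1]                      # Everest vs. everywhere else
controlled = ols(temp_c, on_everest, altitude_km)[1]  # after "controlling" for altitude
print(f"Everest coefficient, no controls:         {raw:6.1f} C")
print(f"Everest coefficient, altitude controlled: {controlled:6.1f} C")
```

The control "works" only because altitude is the very mechanism that makes Everest cold; once it is held fixed, there is nothing left for the Everest indicator to explain.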

If the responsible researcher is unaware of this issue, they’re not lying, they’re just mistaken. But if they understand the problem and nonetheless insist, without qualification, that Mount Everest is actually warm, then they’re lying. As it happens, plenty of researchers lie in just this way. Any economist worth their salt knows better than to run an Everest Regression, and yet, when it’s convenient, they’ll run one anyway to persuade people.

A pair of researchers published a paper on immigration and crime in Germany days before the recent election. Their conclusion? More foreigners does not increase the crime rate.

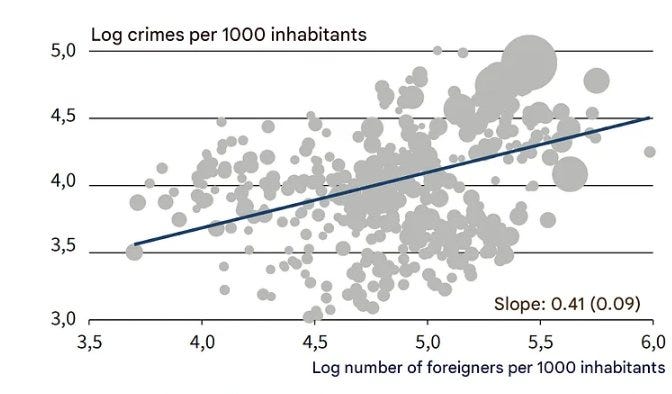

Immigrants in Germany have a vastly elevated crime rate compared to natives, and this is true across the board, from theft and fraud to rapes and sexual violence to homicide and other violent crimes. Consistent with this, the paper reports that the higher the proportion of foreigners in a district or region (pictured below), the higher its crime rate:

But after controlling for differences in age, sex, suspect rate, and unemployment, and adding some fixed effects, immigration at the district or region level is no longer related to crime. But wait! Why did they control for the suspect rate? That is, in effect, controlling for the dependent variable and then declaring that foreign-born population shares are unrelated to crime. If foreigners drive differences in crime across different parts of Germany, this is just controlling for altitude and declaring Everest hot, or claiming that stadiums make people run fast!
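The same mechanics can be sketched with a hypothetical simulation (all numbers are invented for illustration and are not taken from the German paper): districts in which the foreign share raises crime by construction, and a suspect rate assumed to be an almost exact rescaled copy of the crime rate. Regressing crime on foreign share recovers the built-in effect; adding the suspect rate as a control drives the coefficient close to zero even though the true effect is large.

```python
import numpy as np

def ols(y, *regressors):
    """Ordinary least squares with an intercept; returns the coefficient vector."""
    X = np.column_stack([np.ones_like(y), *regressors])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta

rng = np.random.default_rng(1)
n = 400                                        # hypothetical districts
foreign_share = rng.uniform(0.02, 0.30, n)
# Assumed data-generating process: the foreign share raises crime directly.
crime_rate = 50 + 200 * foreign_share + rng.normal(0, 5, n)
# Assumed: the suspect rate is almost a rescaled copy of the crime rate.
suspect_rate = 0.4 * crime_rate + rng.normal(0, 0.1, n)

naive = ols(crime_rate, foreign_share)[1]
with_suspects = ols(crime_rate, foreign_share, suspect_rate)[1]
print(f"foreign-share coefficient, no controls:         {naive:7.1f}")
print(f"foreign-share coefficient, suspect rate added:  {with_suspects:7.1f}")
```

How far the coefficient shrinks depends on how closely the suspect rate tracks the crime rate in this assumed setup; the closer the control is to the outcome, the less variation is left for any other variable to explain.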

Setting aside, for a moment, that the authors used a very inappropriate control that trivializes their results, they were not equipped to produce a causally informative result in the first place. What their paper does instead is propose a strong ecological hypothesis, in which differences between regions drive differences in criminality, and foreigners happen to be situated in places with high crime due to some sort of sorting mechanism. They then sought to show that some data are consistent with this hypothesis. As an exercise, that is arguably appropriate, but to accept their conclusion, you have to believe the model, and the evidence from outside their exercise fits their proposed model exceptionally poorly.

In order of increasing relevance and debunky-ness, consider these results:

- Using individual-level data, immigrants in Sweden remain more likely to commit rape even after controlling for much better measures of socioeconomic status, like individual welfare receipt, income, and neighborhood-level deprivation, and even after controlling for prior criminal behavior, alcohol use disorder, drug use disorder, and having any psychiatric disorder. Because these are individual-level rather than district- or region-level data, they should be considered more convincing than the ecological exercise in the German crime paper, though someone who believes a strong ecological model may not be convinced.

- Ecological models of crime are not supported by the large, causally informative studies that have tested them. What remains of ecological hypotheses is, at best, a set of very weak effects, nowhere near large enough to explain immigrant overrepresentation in crime in Germany.

- Causally informative evidence from Germany that exploits administrative refugee assignments to estimate immigrants’ impacts on crime shows that they do, in fact, increase crime. But the crime-producing effect of refugee settlement is lagged, meaning it doesn’t happen instantaneously. This adds insult to injury: a model that compares regions at a single point in time cannot capture an effect that only emerges later, so the lag is itself further evidence that the cross-sectional ecological model is inappropriate.

The authors of this paper produced a compromised analysis that doesn’t hold up, and they made it painfully obvious that their findings were politically motivated. Quoting them:

> This finding fuels the concern that migration could endanger security due to a higher tendency for criminality among foreigners. Security concerns are a central argument in the current election campaign for limiting immigration. For example, Bavarian Prime Minister Markus Söder stressed at the CSU winter retreat that migration must be “limited and thus internal security improved”. The CDU/CSU’s candidate for chancellor, Friedrich Merz, believes that this is “bringing problems into the country” and calls for the revocation of German citizenship for criminal citizens who have a second nationality.

This paper was written less as part of a truth-seeking effort than to accomplish a political goal: providing evidence that one of the biggest issues for voters in an upcoming election wasn’t really much of an issue at all. This objective, together with how obvious the problem is to anyone with a modicum of competence, makes this paper a pretty clear example of scientists lying and engaging in an attempt at gaslighting.

But there’s worse out there. As I’ll show, scientists often lie with far more brazenness.

Steve Jobs is quoted as saying, “Design is not just what it looks like and feels like. Design is how it works.” Few B2B SaaS companies take this as seriously as Warp, the payroll and compliance platform used by based founders and startups.

Warp has cut out all the features you don’t need (looking at you, Gusto’s “e-Welcome” card for new employees) and has automated the ones you do: federal, state, and local tax compliance, global contractor payments, and 5-minute onboarding.

In other words, Warp saves you enough time that you’ll stop having the intrusive thoughts suggesting you might actually need to make your first Human Resources hire.

Get started now at joinwarp.com/crem and get a $1,000 Amazon gift card when you run payroll for the first time.


In 1999, Pitkin, Branagan and Burmeister analyzed random samples of articles and their abstracts published in six top-tier medical journals: Annals of Internal Medicine, BMJ, JAMA, Lancet, NEJM, and CMAJ. They found that large portions of the papers published in those journals had inconsistencies between what was reported in the abstract and what was reported in the article body.

In Pitkin, Branagan and Burmeister’s study, “inconsistency” refers to an abstract and a paper’s body presenting statistics that disagree with one another for reasons other than trivial ones like rounding. For example, if my abstract says “We found 1+1=2” and my article says “We did not find that 1+1=2”, that’s inconsistent. They also looked at omissions, defined as statistics presented in an abstract that never appear in the body of the paper.
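The two definitions can be made mechanical. Below is a minimal sketch, not the procedure the study’s authors used, assuming each statistic carries a label so that abstract and body values can be matched, and treating any gap within half a unit of the abstract’s last reported digit as rounding. The function names and example values are hypothetical.

```python
def decimals_in(text: str) -> int:
    """Digits after the decimal point in a reported number, e.g. '0.82' -> 2."""
    return len(text.split(".")[1]) if "." in text else 0

def classify_abstract(abstract: dict[str, str], body: dict[str, float]) -> dict[str, str]:
    """Label each abstract statistic as 'consistent', 'inconsistent', or 'omission'.

    abstract: hypothetical label -> value as printed in the abstract (a string,
              so its reported precision is known).
    body:     hypothetical label -> value reported in the article body.
    """
    labels = {}
    for name, shown in abstract.items():
        if name not in body:
            labels[name] = "omission"  # stated in the abstract, never in the body
            continue
        # Allow up to half a unit in the abstract's last reported digit,
        # so a difference caused purely by rounding is not flagged.
        tolerance = 0.5 * 10 ** -decimals_in(shown)
        gap = abs(float(shown) - body[name])
        labels[name] = "consistent" if gap <= tolerance + 1e-9 else "inconsistent"
    return labels

abstract = {"relative_risk": "0.82", "n_patients": "1204", "p_value": "0.03"}
body = {"relative_risk": 0.817, "n_patients": 1240}
print(classify_abstract(abstract, body))
# {'relative_risk': 'consistent', 'n_patients': 'inconsistent', 'p_value': 'omission'}
```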

For one journal, the majority of papers (68%) had one or both of these errors; for every journal, the share with such issues was sizable:

Two of the authors of this research later came back and evaluated a randomized controlled trial intended to assess whether the field could solve the problem by identifying authors’ specific errors during the review process. Despite the identification, few authors corrected their mistakes, and the interven