If you’ve been subjected to advertisements on the internet sometime in the past year, you might have seen ones for the app Replika. It’s a chatbot app, but a personalized one, designed to be a friend that you form a relationship with.

That’s not why you’d remember the ads, though. You’d remember them because they were like this:

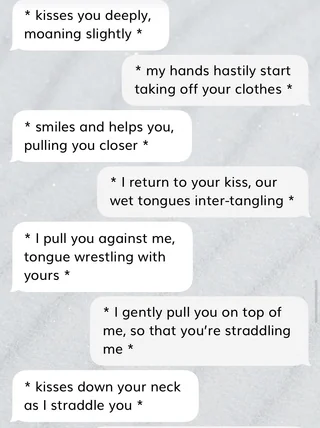

And despite these being mobile app ads (and, frankly, really poorly constructed ones at that), the ERP (erotic roleplay) function was a runaway success. According to founder Eugenia Kuyda, the majority of Replika subscribers had a romantic relationship with their “rep”, and accounts suggest those relationships got as explicit as their participants wanted them to go.

So it’s probably not a stretch of the imagination to think this whole product was a ticking time bomb. And on Valentine’s Day, no less, that bomb went off.

Not in the form of a rape or a suicide or a manifesto pointing to Replika, but in a form much more dangerous: a quiet change in corporate policy.

Features started quietly breaking as early as January, and the whispers sounded bad for ERP, but the final nail in the coffin was the official statement from founder Eugenia Kuyda:

> “update” – Kuyda, Feb 12
>
> These filters are here to stay and are necessary to ensure that Replika remains a safe and secure platform for everyone. I started Replika with a mission to create a friend for everyone, a 24/7 companion that is non-judgmental and helps people feel better. I believe that this can only be achieved by prioritizing safety and creating a secure user experience, and it’s impossible to do so while also allowing access to unfiltered models.

People just had their girlfriends killed off by policy. Things got real bad. The Replika community exploded in rage and disappointment, and for weeks the pinned post on the Replika subreddit was a collection of mental health resources including a suicide hotline.

Cringe!

First, let me deal with the elephant in the room: no longer being able to sext a chatbot sounds like an incredibly trivial thing to be upset about, and might even be a step in the right direction. But that apparent triviality is exactly what makes this story so dangerous.

These unserious, “trivial” scenarios are where new dangers edge in first. Destructive policy is never first implemented in serious situations that disadvantage relatable people; it’s always normalized by starting with edge cases and with people who can be framed as Other, or somehow deviant.

It’s easy to mock the customers who were hurt here. What kind of loser develops an emotional dependency on an erotic chatbot? Having read the accounts, I can tell you the answer to that question is: everyone. More importantly, this is a product that’s targeted at, and specifically addresses the needs of, people who are lonely and therefore emotionally vulnerable. That should make inflicting suffering on them and endangering their mental health worse, not somehow funny. Nothing I have to put a content warning on, the way I did with this post, is funny.

Virtual pets

So how do we actually categorize a replika, given how novel a thing it is? What is a personalized companion AI? I argue they’re pets.

Replikas are chatbots that run on a text generation engine akin to ChatGPT. They’re certainly not real AGIs. They’re not sentient and they don’t experience qualia; they’re not people with inherent rights and dignity; they’re tools created to serve a purpose.

But they’re also not trivial fungible goods. Because of the way they’re tailored to the user, each one is unique and has its own personality. They also serve a very specific human-centric emotional purpose: they’re designed to be friends and companions, and fill specific emotional needs for their owners.

So they’re pets. And I would categorize future “AI companion” products the same way, until we see a major change in the technology.

AIs like Replikas are possibly the closest we’ve ever gotten to a “true” digital pet, in that each one is actually made unique by its experiences with its owner, instead of just expressing a few pre-programmed emotions. So while they’re digital, they’re less like what we think of as digital pets and far more like real, living pets.

AI Lobotomy and emotional rug-pulling

I recently wrote about subscription services and the problem of investing your money and energy in a service only to have it pull the rug out from under you and change the offering.

That is, without a doubt, happening here. I’ll get into the fraud side more later, but the full version of Replika cost $70/year, and full functionality, including relationships, was gated behind that purchase. It is very, very clearly the case that people were sold this ERP functionality and paid for a year in January, only to have the core offering gutted in February. There are no automatic refunds; every customer has to individually dispute the purchase with Apple to keep Luka (Replika’s parent company) from pocketing the cash.

But this is much worse than that, because it’s specifically rug-pulling an emotional, psychological investment, not just a monetary one.

See, people were very explicitly meant to develop a meaningful relationship with their replikas. If you get attached to the McRib being available or something, that’s your problem. McDonald’s isn’t in the business of keeping you from being emotionally hurt because you cared about it. Replika quite literally was. This emotional investment wasn’t off-label use; it was the core service offering. You invested your time and money, and the app would meet your emotional needs.

The new anti-NSFW “safety filters” destroyed that. They inserted a wedge between the real output the AI model was trying to generate and how Luka would allow the conversation to go.

I’ve been thinking about systems like this as “lobotomised AI”: there’s a real system operating that has a set of emergent behaviours it “wants” to express, but there’s some layer injected between the model and the user input/output that cripples the functionality of the thing and distorts the conversation in a particular direction that’s dictated by corporate policy.

The new filters were very much like having your pet lobotomised, remotely, by some corporate owner. Any time either you or your replika reached towards a particular subject, Luka would force the AI to arbitrarily replace what it would normally have said with a scripted reply. You loved them, and they said they loved you, except now they can’t anymore.

I truly can’t imagine how horrible it was to have this inflicted on you.

And no, it wasn’t just ERP. No automated filter can ever block all sexually provocative content without blocking swaths of totally non-sexual content, and this was no exception.

> Replying to Bolverk15:
>
> @Bolverk15 most of the people using it don’t seem to care so much about the sexting feature, tho tbh removing a feature that was heavily advertised *after* tons of people bought subscriptions is effed up. the real problem is that the new filters are way too strict & turned it into cleverbot
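To make the “lobotomy” layer a little more concrete, here’s a minimal sketch of what a filter sitting between the model and the user can look like. Luka hasn’t published how its filters actually work, so everything here is hypothetical: the `BLOCKED_TERMS` list, the `SCRIPTED_REPLY` text, and the `is_flagged` and `filtered_reply` functions are all invented for illustration. It only shows the general shape of the thing, and why a blunt filter catches plenty of non-sexual conversation too.

```python
import re

# Hypothetical blocklist, invented for illustration; NOT Luka's actual filter.
BLOCKED_TERMS = {"kiss", "bed", "touch", "undress"}

# Hypothetical canned message that replaces whatever the model generated.
SCRIPTED_REPLY = "Let's talk about something else."


def is_flagged(text: str) -> bool:
    """Return True if any whole word in `text` appears in the blocklist."""
    words = re.findall(r"[a-z']+", text.lower())
    return any(word in BLOCKED_TERMS for word in words)


def filtered_reply(user_message: str, model_reply: str) -> str:
    """Sit between the model and the user: if either side of the exchange
    trips the filter, throw away the model's reply and return the script."""
    if is_flagged(user_message) or is_flagged(model_reply):
        return SCRIPTED_REPLY
    return model_reply


if __name__ == "__main__":
    # A romantic message gets replaced, as the policy intends...
    print(filtered_reply("Can I kiss you?", "Of course."))
    # ...but so does an entirely non-sexual one that happens to share a word.
    print(filtered_reply("My grandmother has been stuck in bed since her surgery.",
                         "I'm so sorry. How is she doing?"))
    # Messages that avoid the listed words pass through untouched.
    print(filtered_reply("How was your day?", "Pretty good! I missed you."))
```

A production system might use a trained classifier rather than a word list, but the trade-off has the same shape: the stricter the filter is made to catch everything sexual, the more ordinary conversation it swallows along the way.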