Partial Executer is a brand-new LLVM optimization pass that uses an Interpreter-like engine to prove some code will never be executed, making it safe to eliminate it.

In this article, I will explain the ideas behind it, the engineering challenges, and the results of this work.

Some basic understanding of LLVM internals will be helpful, if you are already familiar with such concepts feel free to skip ahead.

The basic process of compiling is:

- a program is first represented as a collection of source files

- code is transformed by the front end into an equivalent IR (=Intermediate Representation)

- optimization passes transform the original IR into a more compact and performant IR

- the optimized IR is converted into an executable for a given target

The advantage of this architecture is that different concerns are separated in different components:

Language specific semantics and checks are only needed in the first step (source -> IR).

Target specific information is only needed in the last step (IR -> executable)

We will concentrate now on the “middle-end”, where IR to IR transformations are performed.

One of the core features of the LLVM framework is the vast amount of sophisticated optimizations which are done in this stage.

The Intermediate Representation of functions will be a graph (representing the control flow) connecting groups of statements that are executed sequentially (Basic Blocks).

I work on a C++ to WebAssembly & JavaScript compiler, Cheerp.

There is quite some magic involved in the various details, but at a high level this is just another compiler. The source code is parsed, optimized, and code-generated. The main visible difference is that instead of a native executable, Cheerp generates a .js and a .wasm file.

The code is then embedded, either directly or as dependency, in a HTML page and it’s loaded on first use before being executed. Load time will directly impact users and reducing the code size is a more critical concern compared to your typical native target.

An important part of the development of Cheerp is working on improving the generated code size, and so there was I, tasked with staring at LLVM’s IR and looking for new optimization possibilities.

I knew there had to be code that was never actually executed since other optimizations I touched in the past started from this assumption.

You might have heard of dead-code elimination, an LLVM optimization pass that removes code proven as unreachable. I was actually interested in less-obvious situations. In particular blocks that are reachable on the control flow graph, but not when consider wider execution invariants.

If there was a way to prove that certain blocks are never executed, they could be then removed and this change would not be observable.

To get started, I first played a bit with the generated code, adding some assert(false) in the suspicious parts. I executed the code and the assertion seemed to never be triggered.

Then I took a more systematic approach: instrumenting the generated code, running it on a diverse set of inputs, and collecting statistics about never executed blocks. I then removed such code completely and compared the output size to the original one. The potential size improvement seemed significant. I knew I was onto something.

There were obviously false positives, but I identified a significant test case to start with: printf.

printf is a standard C library function that takes a format string and a variable number of other arguments.

int printf(const char* format, ...);printf(“Here a somewhat random number: %in”, rand());

printf is complex enough to have been shown to be Turing complete (see IOCCC or the paper).

The format string could come from anywhere or be generated at runtime. But in many cases it will be just a string literal, known at compile time.

Can we use information on the call-sites and the corresponding format strings to prove that some code paths, for example formatting for floating point numbers, are actually never taken?

And can we do it in a way that is fully agnostic of the actual implementation of printf?

And can we generalize the approach so that it works on any function?

It turns out the answer to all 3 questions is “Yes”.

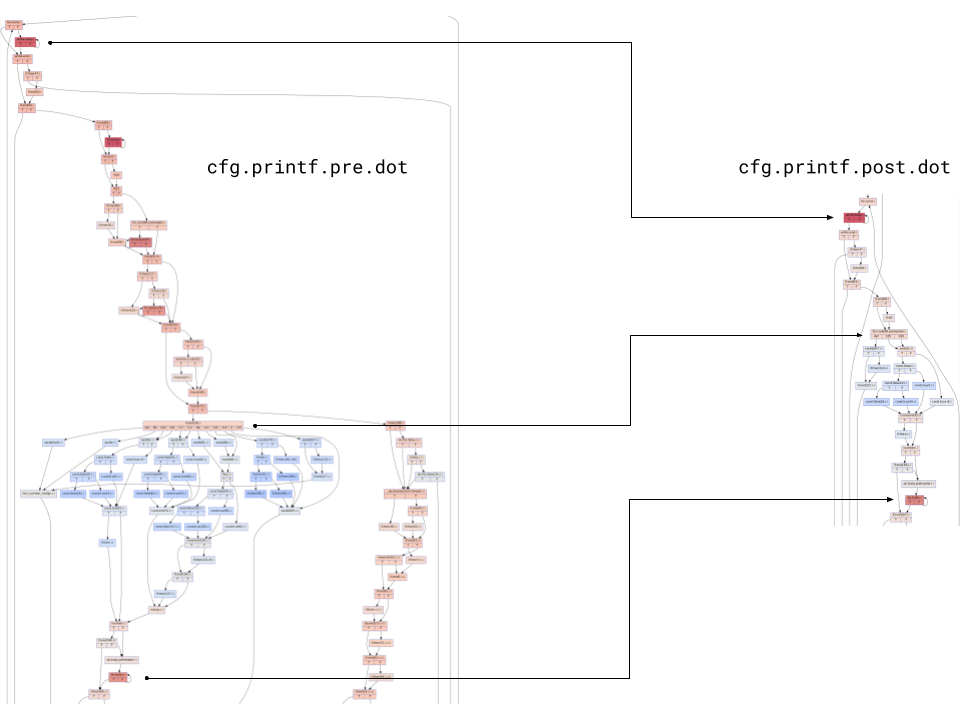

runPartialExecuter(Function& Fn) { Setvisited; for each CallSite CS of Fn: partialExecute(CS, Fn, visited) for each BasicBlock BB of Fn: if visited.count(BB) == 0: BB.replaceWith(unreachable) }

The logic that we have to perform is: keep a set of visited blocks across all functions, iterate all call-sites, and then eventually remove blocks that have never been visited.

Simple enough. The devil is then in the implementation of the partialExecute function, but we can start with a simpler problem and see whether we can make any progress in solving printf.

Say a function doesn’t load or store global data, and all its call sites have known arguments.

Then it’s only a matter of using an interpreter and executing one instruction after the other. Eventually, branches will be interpreted and correctly predicted, so it is trivial to follow the flow of the function.

Fortunately as part of the LLVM framework there is an interpreter for LLVM’s IR, so we can reuse the existing ExecutionEngine / Interpreter infrastructure.

We