Ever wondered how AI agents actually work behind the scenes? This guide breaks down how agent systems are built as simple graphs – explained in the most beginner-friendly way possible!

Note: This is a super-friendly, step-by-step version of the official PocketFlow Agent documentation. We’ve expanded all the concepts with examples and simplified explanations to make them easier to understand.

Have you been hearing about “LLM agents” everywhere but feel lost in all the technical jargon? You’re not alone! While companies are racing to build increasingly complex AI agents like GitHub Copilot, Perplexity AI, and AutoGPT, most explanations make them sound like rocket science.

Good news: they’re not. In this beginner-friendly guide, you’ll learn:

- The surprisingly simple concept behind all AI agents
- How agents actually make decisions (in plain English!)
- How to build your own simple agent with just a few lines of code
- Why most frameworks overcomplicate what’s actually happening

In this tutorial, we’ll use PocketFlow – a tiny 100-line framework that strips away all the complexity to show you how agents really work under the hood. Unlike other frameworks that hide the important details, PocketFlow lets you see the entire system at once.

Most agent frameworks hide what’s really happening behind complex abstractions that look impressive but confuse beginners. PocketFlow takes the opposite approach: it is small enough that you can read all of it and see exactly how agents work!

Benefits for beginners:

- Crystal clear: No mysterious black boxes or complex abstractions
- See everything: The entire framework fits in one readable file
- Learn fundamentals: Perfect for understanding how agents really operate
- No baggage: No massive dependencies or vendor lock-in

Instead of trying to understand a gigantic framework with thousands of files, PocketFlow gives you the fundamentals so you can build your own understanding from the ground up.

Imagine our agent system like a kitchen:

- Nodes are like different cooking stations (chopping station, cooking station, plating station)
- Flow is like the recipe that tells you which station to go to next
- Shared store is like the big countertop where everyone can see and use the ingredients

In our kitchen (agent system):

- Each station (Node) has three simple jobs:
  - Prep: Grab what you need from the countertop (like getting ingredients)
  - Exec: Do your special job (like cooking the ingredients)
  - Post: Put your results back on the countertop and tell everyone where to go next (like serving the dish and deciding what to make next)
- The recipe (Flow) just tells you which station to visit based on decisions:
  - “If the vegetables are chopped, go to the cooking station”
  - “If the meal is cooked, go to the plating station”
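To make the kitchen analogy concrete, here is a minimal sketch of stations, a recipe, and a countertop in plain Python. The `Node` and `Flow` classes below are hand-rolled for illustration and are not PocketFlow's actual source; the `next` helper, the station classes, and the action names (`"chopped"`, `"cooked"`, `"done"`) are all assumptions made up for this example.

```python
class Node:
    """A station: prep reads the countertop, exec does the work, post writes back."""

    def __init__(self):
        self.successors = {}  # action name -> next station

    def next(self, node, action="default"):
        # Hypothetical helper: "when post returns `action`, go to `node`".
        self.successors[action] = node
        return node

    def prep(self, shared):
        return None

    def exec(self, prep_result):
        return None

    def post(self, shared, prep_result, exec_result):
        return "default"

    def run(self, shared):
        prep_result = self.prep(shared)
        exec_result = self.exec(prep_result)
        return self.post(shared, prep_result, exec_result)


class Flow:
    """The recipe: keep visiting stations until no station matches the action."""

    def __init__(self, start):
        self.start = start

    def run(self, shared):
        node = self.start
        while node is not None:
            action = node.run(shared)
            node = node.successors.get(action)


class Chop(Node):
    def prep(self, shared):
        return shared["vegetables"]

    def exec(self, vegetables):
        return [f"chopped {v}" for v in vegetables]

    def post(self, shared, prep_result, exec_result):
        shared["chopped"] = exec_result
        return "chopped"


class Cook(Node):
    def prep(self, shared):
        return shared["chopped"]

    def exec(self, chopped):
        return "stir-fry of " + ", ".join(chopped)

    def post(self, shared, prep_result, exec_result):
        shared["meal"] = exec_result
        return "cooked"


class Plate(Node):
    def prep(self, shared):
        return shared["meal"]

    def exec(self, meal):
        return f"plated {meal}"

    def post(self, shared, prep_result, exec_result):
        shared["dish"] = exec_result
        return "done"  # no station is registered for "done", so the flow stops


chop, cook, plate = Chop(), Cook(), Plate()
chop.next(cook, "chopped")   # "If the vegetables are chopped, go to the cooking station"
cook.next(plate, "cooked")   # "If the meal is cooked, go to the plating station"

shared = {"vegetables": ["carrot", "onion"]}  # the countertop
Flow(start=chop).run(shared)
```

After the run, `shared["dish"]` holds the plated meal. Notice that no station ever calls another station directly: each one only reads from and writes to the shared store, then names an action.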

Let’s see how this works with our research helper!

An LLM (Large Language Model) agent is basically a smart assistant (like ChatGPT but with the ability to take actions) that can:

- Think about what to do next
- Choose from a menu of actions
- Actually do something in the real world
- See what happened
- Think again…

Think of it like having a personal assistant managing your tasks:

- They review your inbox and calendar to understand the situation
- They decide what needs attention first (reply to urgent email? schedule a meeting?)
- They take action (draft a response, book a conference room)
- They observe the result (did someone reply? was the room available?)
- They plan the next task based on what happened
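The think, act, observe cycle described above can be sketched as a short loop. This is only an illustration: `decide` is a hard-coded stand-in for the LLM, and the action names and canned results in `ACTIONS` are invented for the example.

```python
# Menu of actions the assistant can take; each returns a canned observation.
ACTIONS = {
    "check_calendar": lambda: "room is free at 3pm",
    "book_room": lambda: "room booked for 3pm",
}


def decide(observations):
    """Stand-in for the LLM: choose the next action from what we have seen so far."""
    if not observations:
        return "check_calendar"
    if observations[-1] == "room is free at 3pm":
        return "book_room"
    return "finish"


def run_agent(max_steps=10):
    observations = []
    for _ in range(max_steps):                   # cap the loop so it always stops
        action = decide(observations)            # think, then choose from the menu
        if action == "finish":
            break
        observations.append(ACTIONS[action]())   # act, then see what happened
    return observations


history = run_agent()
```

The `max_steps` cap is a design choice worth keeping in real agents too: a decision step that never says "finish" would otherwise loop forever.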

Here’s the mind-blowing truth about agents that frameworks overcomplicate:

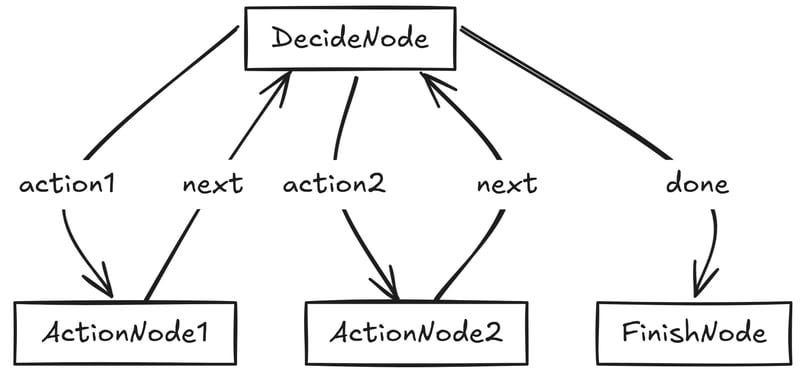

That’s it! Every agent is just a graph with:

- A decision node that branches to different actions
- Action nodes that do specific tasks
- A finish node that ends the process
- Edges that connect everything together
- Loops that bring execution back to the decision node
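Because the whole thing is just a graph, it can be written down as plain data. The sketch below uses made-up node names (`decide`, `action_a`, `action_b`, `finish`) and picks out each ingredient from the list above by looking only at the edges.

```python
# Each edge is (from_node, to_node). Node names are invented for illustration.
EDGES = [
    ("decide", "action_a"),
    ("decide", "action_b"),
    ("decide", "finish"),
    ("action_a", "decide"),
    ("action_b", "decide"),
]

nodes = {name for edge in EDGES for name in edge}
outgoing = {n: [dst for src, dst in EDGES if src == n] for n in nodes}

# A decision node branches: it has more than one outgoing edge.
decision_nodes = sorted(n for n in nodes if len(outgoing[n]) > 1)

# A finish node ends the process: it has no outgoing edges.
finish_nodes = sorted(n for n in nodes if not outgoing[n])

# Loops are the edges that lead back into a decision node.
loops = sorted((src, dst) for src, dst in EDGES if dst in decision_nodes)
```

Here `decision_nodes` comes out as `["decide"]`, `finish_nodes` as `["finish"]`, and `loops` contains the two edges that return from the action nodes to `decide`.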

No complex math, no mysterious algorithms – just nodes and arrows! Everything else is just details. If you dig deeper, you’ll uncover these hidden graphs in overcomplicated frameworks:

- OpenAI Agents: run.py#L119 shows the workflow as a graph.
- Pydantic Agents: _agent_graph.py#L779 organizes steps in a graph.
- LangChain: agent_iterator.py#L174 demonstrates the loop structure.
- LangGraph: agent.py#L56 takes a graph-based approach.

Let’s see how the graph actually works with a simple example.

Imagine we want to build an AI assistant that can search the web and answer questions – similar to tools like Perplexity AI, but much simpler. We want our agent to be able to:

- Read a question from a user
- Decide if it needs to search for information
- Look things up on the web if needed
- Provide an answer once it has enough information

Let’s break down our agent into individual “stations” that each handle one specific job. Think of these stations like workers on an assembly line – each with their own specific task.

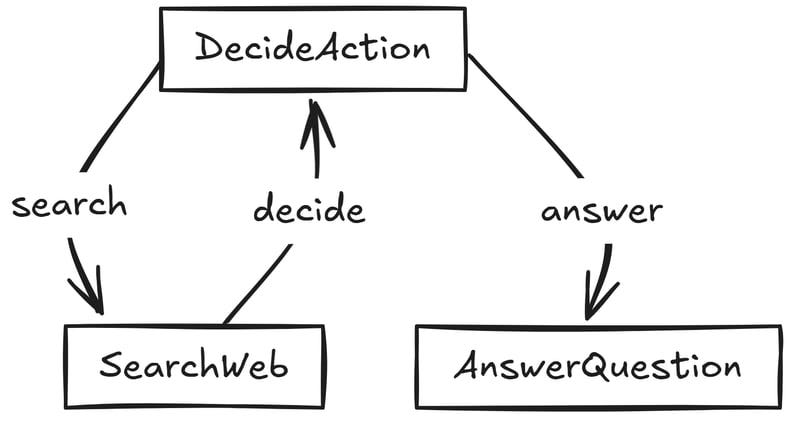

Here’s a simple diagram of our research agent:

In this diagram:

- DecideAction is our “thinking station” where the agent decides what to do next
- SearchWeb is our “research station” where the agent looks up information
- AnswerQuestion is our “response station” where the agent creates the final answer

Imagine you asked our agent: “Who won the 2023 Super Bowl?”

Here’s what would happen step-by-step:

- DecideAction station:
  - LOOKS AT: Your question and what we know so far (nothing yet)
  - THINKS: “I don’t know who won the 2023 Super Bowl, I need to search”
  - DECIDES: Search for “2023 Super Bowl winner”
  - PASSES TO: SearchWeb station
- SearchWeb station:
  - LOOKS AT: The search query “2023 Super Bowl winner”
  - DOES: Searches the internet (imagine it finds “The Kansas City Chiefs won”)
  - SAVES: The search results to our shared countertop
  - PASSES TO: Back to DecideAction station
- DecideAction station (second time):
  - LOOKS AT: Your question and what we know now (search results)
  - THINKS: “Great, now I know the Chiefs won the 2023 Super Bowl”
  - DECIDES: We have enough info to answer
  - PASSES TO: AnswerQuestion station
- AnswerQuestion station:
  - LOOKS AT: Your question and all our research
  - DOES: Creates a friendly answer using all the information
  - SAVES: The final answer
  - FINISHES: The task is complete!

This is exactly what our code will do, just expressed in a programming language.
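As a rough preview, the walkthrough can be traced with three plain functions and a dictionary playing the shared countertop. Everything here is a simplification for illustration: the decision is a hard-coded rule rather than an LLM call, the search result is canned, and the function and key names are assumptions, not the tutorial's final code.

```python
def decide_action(shared):
    # Hard-coded rule standing in for the LLM's judgement.
    if "context" not in shared:
        shared["search_query"] = "2023 Super Bowl winner"
        return "search"
    return "answer"


def search_web(shared):
    # Canned result instead of a real web search.
    shared["context"] = "The Kansas City Chiefs won"
    return "decide"


def answer_question(shared):
    shared["answer"] = f"{shared['context']} the 2023 Super Bowl."
    return None  # no next station: the task is complete


STATIONS = {
    "decide": decide_action,
    "search": search_web,
    "answer": answer_question,
}

shared = {"question": "Who won the 2023 Super Bowl?"}
visited = []
station = "decide"
while station is not None:
    visited.append(station)
    station = STATIONS[station](shared)
```

`visited` records the same order as the walkthrough (decide, search, decide, answer), and `shared` ends up holding the question, the search query, the search result, and the final answer.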

Let’s build each of these stations one by one and then connect them together!

The DecideAction node is like the “brain” of our agent. Its job is simple:

- Look at the question and any information we’ve gathered so far
- Decide whether we need to search for more information or if we can answer now

Let’s build it step by step:

    from pocketflow import Node

    class DecideAction(Node):
        def prep(self, shared):
            # Think of "shared" as a big notebook that everyone can read and write in
            # It's where we store everything our agent knows

            # Look for any previous research we've done (if we haven't searched yet, just note that)
            context = shared.get("context", "No previous search")

            # Get the question we're trying to answer
            question = shared["question"]

            # Return both pieces of information for the next step
            return question, context

First, we created a prep method that gathers information. Think of prep like a chef gathering ingredients before cooking. All it does is look at what we already know (our “context”) and what question we’re trying to answer.
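To see what prep hands to the next step, here is a small check you can run on its own. The empty `Node` class is only a placeholder so the snippet works without PocketFlow installed, and the sample shared stores are made up.

```python
class Node:
    """Placeholder base class so this snippet runs without PocketFlow installed."""


class DecideAction(Node):
    def prep(self, shared):
        context = shared.get("context", "No previous search")
        question = shared["question"]
        return question, context


node = DecideAction()

# First visit: nothing has been searched yet, so the default context is used.
before_search = node.prep({"question": "Who won the 2023 Super Bowl?"})

# Later visit: a search result is already sitting in the shared store.
after_search = node.prep({
    "question": "Who won the 2023 Super Bowl?",
    "context": "The Kansas City Chiefs won",
})
```

`shared.get` supplies a fallback for the optional context, while `shared["question"]` raises a `KeyError` if the question is missing. That difference is useful: an agent with no question has nothing to do, so failing loudly is reasonable.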

Now, let’s build the “thinking” part:

        def exec(self, inputs):
            # This is where the magic happens - the LLM "thinks" about what to do
            question, context = inputs

            # We ask the LLM to decide what to do next
            ...
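The listing above stops partway through, so the following is only a guess at the general shape of a decision step, not the tutorial's implementation. `fake_llm` is a hypothetical stand-in that returns canned JSON, and the prompt wording, the JSON keys (`action`, `search_query`), and the `post` routing are all assumptions for illustration.

```python
import json


def fake_llm(prompt):
    """Hypothetical stand-in for a real LLM call; returns a canned JSON reply."""
    if "No previous search" in prompt:
        return json.dumps({"action": "search", "search_query": "2023 Super Bowl winner"})
    return json.dumps({"action": "answer"})


class DecideActionSketch:
    def prep(self, shared):
        return shared["question"], shared.get("context", "No previous search")

    def exec(self, inputs):
        question, context = inputs
        # Describe the situation and the menu of actions, then ask for a decision.
        prompt = (
            f"Question: {question}\n"
            f"What we know so far: {context}\n"
            "Reply with JSON containing an 'action' of 'search' or 'answer'."
        )
        return json.loads(fake_llm(prompt))

    def post(self, shared, prep_result, decision):
        # Save anything the next station needs, then name the edge to follow.
        if decision["action"] == "search":
            shared["search_query"] = decision["search_query"]
        return decision["action"]


node = DecideActionSketch()
shared = {"question": "Who won the 2023 Super Bowl?"}

first = node.post(shared, None, node.exec(node.prep(shared)))

shared["context"] = "The Kansas City Chiefs won"  # pretend a search happened
second = node.post(shared, None, node.exec(node.prep(shared)))
```

Asking for a structured reply (JSON here) is what lets the returned action double as the name of the edge the flow should follow next.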

8 Comments

zh2408

Hey folks! I just posted a quick tutorial explaining how LLM agents (like OpenAI Agents, Pydantic AI, Manus AI, AutoGPT or PerplexityAI) are basically small graphs with loops and branches. For example:

OpenAI Agents: for the workflow logic: https://github.com/openai/openai-agents-python/blob/48ff99bb…

Pydantic Agents: organizes steps in a graph: https://github.com/pydantic/pydantic-ai/blob/4c0f384a0626299…

Langchain: demonstrates the loop structure: https://github.com/langchain-ai/langchain/blob/4d1d726e61ed5…

If all the hype has been confusing, this guide shows how they actually work under the hood, with simple examples. Check it out!

https://zacharyhuang.substack.com/p/llm-agent-internal-as-a-…

mentalgear

Everything that was previously just called automation or pipeline processing on top of LLMs now goes by the buzzword "agents". The hype bubble needs constant feeding to keep from imploding.

campbel

I follow Mr. Huang, read and watch his content, and plan to use PocketFlow in some cases. That's a preamble, because I don't agree with this assessment. I think agents as nodes in a DAG workflow are _an_ implementation of an agentic system, but not the kind of system I most often interact with (e.g. Cursor, Claude + MCP).

Agentic systems can simply be the LLM + prompting + tools[1]. LLMs (especially chain-of-thought models) are more than capable of breaking problems down into steps, working out which tools to use, and then executing the steps in sequence. All of this is done with the model in the driver's seat.

I think the system described in the post needs a different name. It's a traditional workflow system with an agent operating on individual tasks. It's more rigid in that the workflow is set up ahead of time. Typical agentic systems are largely undefined or defined via prompting. For some use cases this rigidity is a feature.

[1] https://docs.anthropic.com/en/docs/build-with-claude/tool-us…

_pdp_

It is hard to put a pin on this one because there are so many things wrong with this definition. There are agent frameworks that are not rebranded workflow tools too. I don't think this article helps explain anything; it just puts the intended audience in the same mental box we have been stuck in since the invention of programming, which does not help.

Forget about boxes and deterministic control and start thinking of error tolerance and recovery. That is what agents are all about.

miguelinho

Great write up! In my opinion, your description likely accurately models what AI agents are doing. Perhaps the graph could be static or dynamic. Either way – it makes sense! Also, thank you for removing the hype!

jumploops

Anthropic[0] and Google[1] are both pushing for a clear definition of an “agent” vs. an “agentic workflow”

tl;dr from Anthropic:

> Workflows are systems where LLMs and tools are orchestrated through predefined code paths.

> Agents, on the other hand, are systems where LLMs dynamically direct their own processes and tool usage, maintaining control over how they accomplish tasks.

Most “agents” today fall into the workflow category.

The foundation model makers are pushing their new models to be better at the second, “pure” agent, approach.

In practice, I’m not sure how well the “pure” approach will work for most LLM-assisted tasks.

I liken it to a fresh intern who shows up with amnesia every day.

Even if you tell them what they did yesterday, they’re still liable to take a different path for today’s work.

My hunch is that we’ll see an evolution of this terminology, and agents of the future will still have some “guiderails” (note: not necessarily _guard_rails) that make their behavior more predictable over long horizons.

[0]https://www.anthropic.com/engineering/building-effective-age…

[1]https://www.youtube.com/watch?v=Qd6anWv0mv0

bckr

Anyone succeeding with agents in production? Other than Cursor :)

DrFalkyn

I think the model he is looking for is a deterministic finite automaton (DFA)