Comment Permalink

I think this is missing a major piece of the self-play scaling paradigm, one which has been weirdly absent in most discussions of o1 as well: much of the point of a model like o1 is not to deploy it, but to generate training data for the next model. It was cool that o1’s accuracy scaled with the number of tokens it generated, but it was even cooler that it was successfully bootstrapping from 4o to o1-preview (which allowed o1-mini) to o1-pro to o3 to…

EDIT: given the absurd response to this comment, I’d point out that I do not think OA has achieved AGI and I don’t think they are about to achieve it either. I am trying to figure out what they think.

Every problem that an o1 solves is now a training data point for an o3 (eg. any o1 session which finally stumbles into the right answer can be refined to drop the dead ends and produce a clean transcript to train a more refined intuition). As Noam Brown likes to point out, the scaling laws imply that if you can search effectively with a NN for even a relatively short time, you can get performance on par with a model hundreds or thousands of times larger; and wouldn’t it be nice to be able to train on data generated by an advanced model from the future? Sounds like good training data to have!

This means that the scaling paradigm here may wind up looking a lot like the current train-time paradigm: lots of big datacenters laboring to train a final frontier model of the highest intelligence, which will usually be used in a low-search way and be turned into smaller cheaper models for the use-cases where low/no-search is still overkill. Inside those big datacenters, the workload may be almost entirely search-related (as the actual finetuning is so cheap and easy compared to the rollouts), but that doesn’t matter to everyone else; as before, what you see is basically, high-end GPUs & megawatts of electricity go in, you wait for 3-6 months, a smarter AI comes out.

I am actually mildly surprised OA has bothered to deploy o1-pro at all, instead of keeping it private and investing the compute into more bootstrapping of o3 training etc. (This is apparently what happened with Anthropic and Claude-3.6-opus – it didn’t ‘fail’, they just chose to keep it private and distill it down into a small cheap but strangely smart Claude-3.6-sonnet. And did you ever wonder what happened with the largest Gemini models or where those incredibly cheap, low latency, Flash models come from…?‡ Perhaps it just takes more patience than most people have.) EDIT: It’s not like it gets them much training data: all ‘business users’ (who I assume would be the majority of o1-pro use) is specifically exempted from training unless you opt-in, and it’s unclear to me if o1-pro sessions are trained on at all (it’s a ‘ChatGPT Pro’ level, and I can’t quickly find whether a professional plan is considered ‘business’). Further, the pricing of DeepSeek’s r1 series at something like a twentieth the cost of o1 shows how much room there is for cost-cutting and why you might not want to ship your biggest best model at all compared to distilling down to a small cheap model.

If you’re wondering why OAers† are suddenly weirdly, almost euphorically, optimistic on Twitter and elsewhere and making a lot of haha-only-serious jokes (EDIT: to be a little more precise, I’m thinking of Altman, roon, Brown, Sutskever, several others like Will Bryk or Miles Brundage’s “Time’s Up”, Apples, personal communications, and what I think are echoes in other labs’ people, and not ‘idontexist_nn’/’RileyRalmuto’/’iruletheworldmo’ or the later Axios report that “Several OpenAI staff have been telling friends they are both jazzed & spooked by recent progress.”), watching the improvement from the original 4o model to o3 (and wherever it is now!) may be why. It’s like watching the AlphaGo Elo curves: it just keeps going up… and up… and up…

There may be a sense that they’ve ‘broken out’, and have finally crossed the last threshold of criticality, from merely cutting-edge AI work which everyone else will replicate in a few years, to takeoff – cracked intelligence to the point of being recursively self-improving and where o4 or o5 will be able to automate AI R&D and finish off the rest: Altman in November 2024 saying “I can see a path where the work we are doing just keeps compounding and the rate of progress we’ve made over the last three years continues for the next three or six or nine or whatever” turns into a week ago, “We are now confident we know how to build AGI as we have traditionally understood it…We are beginning to turn our aim beyond that, to superintelligence in the true sense of the word. We love our current products, but we are here for the glorious future. With superintelligence, we can do anything else.” (Let DeepSeek chase their tail lights; they can’t get the big iron they need to compete once superintelligence research can pay for itself, quite literally.)

And then you get to have your cake and eat it too: the final AlphaGo/Zero model is not just superhuman but very cheap to run too. (Just searching out a few plies gets you to superhuman strength; even the forward pass alone is around pro human strength!)

If you look at the relevant scaling curves – may I yet again recommend reading Jones 2021?* – the reason for this becomes obvious. Inference-time search is a stimulant drug that juices your score immediately, but asymptotes hard. Quickly, you have to use a smarter model to improve the search itself, instead of doing more. (If simply searching could work so well, chess would’ve been solved back in the 1960s. It’s not hard to search more than the handful of positions a grandmaster human searches per second; the problem is searching the right positions rather than slamming into the exponential wall. If you want a text which reads ‘Hello World’, a bunch of monkeys on a typewriter may be cost-effective; if you want the full text of Hamlet before all the protons decay, you’d better start cloning Shakespeare.) Fortunately, you have the training data & model you need right at hand to create a smarter model…

Sam Altman (@sama, 2024-12-20) (emphasis added):

seemingly somewhat lost in the noise of today:

on many coding tasks, o3-mini will outperform o1 at a massive cost reduction!

i expect this trend to continue, but also that the ability to get marginally more performance for exponentially more money will be really strange

So, it is interesting that you can spend money to improve model performance in some outputs… but ‘you’ may be ‘the AI lab’, and you are simply be spending that money to improve the model itself, not just a one-off output for some mundane problem. Few users really need to spend exponentially more money to get marginally more performance, if that’s all you get; but if it’s simply part of the capex along the way to AGI or ASI…

This means that outsiders may never see the intermediate models (any more than Go players got to see random checkpoints from a third of the way through AlphaZero training). And to the extent that it is true that ‘deploying costs 1000x more than now’, that is a reason to not deploy at all. Why bother wasting that compute on serving external customers, when you can instead keep training, and distill that back in, and soon have a deployment cost of a superior model which is only 100x, and then 10x, and then 1x, and then <1x...?

Thus, the search/test-time paradigm may wind up looking surprisingly familiar, once all of the second-order effects and new workflows are taken into account. It might be a good time to refresh your memories about AlphaZero/MuZero training and deployment, and what computer Go/chess looked like afterwards, as a forerunner.

* Jones is more relevant than several of the references here like Snell, because Snell is assuming static, fixed models and looking at average-case performance, rather than hardest-case (even though the hardest problems are also going to be the most economically valuable – there is little value to solving easy problems that other models already solve, even if you can solve them cheaper). In such a scenario, it is not surprising that spamming small dumb cheap models to solve easy problems can outperform a frozen large model. But that is not relevant to the long-term dynamics where you are training new models. (This is a similar error to everyone was really enthusiastic about how ‘overtraining small models is compute-optimal’ – true only under the obviously false assumption that you cannot distill/quantify/prune large models. But you can.)

† What about Anthropic? If they’re doing the same thing, what are they saying? Not much, but Anthropic people have always had much better message discipline than OA so nothing new there, and have generally been more chary of pushing benchmark SOTAs than OA. It’s an interesting cultural difference, considering that Anthropic was founded by ex-OAers and regularly harvests from OA (but not vice-versa). Still, given their apparent severe compute shortages and the new Amazon datacenters coming online, it seems like you should expect something interesting from them in the next half-year. EDIT: Dario now says he is more confident than ever of superintelligence by 2027, smarter models will release soon, and Anthropic compute shortages will ease as they go >1m GPUs.

‡ Notably, today (2025-01-21), Google is boasting about a brand new ‘flash-thinking’ model which beats o1 and does similar inner-monologue reasoning. As I understand, and checking around and looking at the price per token ($0) in Google’s web interface and watching the speed (high) of flash-thinking on one of my usual benchmark tasks (Milton poetry), the ‘flash’ models are most comparable to Sonnet or GPT-mini models, ie. they are small models, and the ‘Pro’ models are the analogues to Opus or GPT-4/5. I can’t seem to find anything in the announcements saying if ‘flash-thinking’ is trained ‘from scratch’ for the RL o1 reasoning, or if it’s distilled, or anything about the existence of a ‘pro-thinking’ model which flash-thinking is distilled from… So my inference is that there is probably a large ‘pro-thinking’ model Google is not talking about, similar to Anthropic not talking about how Sonnet was trained.

An important update: “Stargate” (blog) is now officially public, confirming earlier $100b numbers and some loose talk about ‘up to $500b’ being spent. Noam Brown commentary:

@OpenAI excels at placing big bets on ambitious research directions driven by strong conviction.

This is on the scale of the Apollo Program and Manhattan Project when measured as a fraction of GDP. This kind of investment only happens when the science is carefully vetted and people believe it will succeed and be completely transformative. I agree it’s the right time.

…I don’t think t

… (read more)

2Thane Ruthenis

He gets onboarded only on January 28th, for clarity.

8gwern

My point there is that he was talking to the reasoning team pre-hiring (forget ‘onboarding’, who knows what that means), so they would be unable to tell him most things – including if they have a better reason than ‘faith in divine benevolence’ to think that ‘more RL does fix it’.

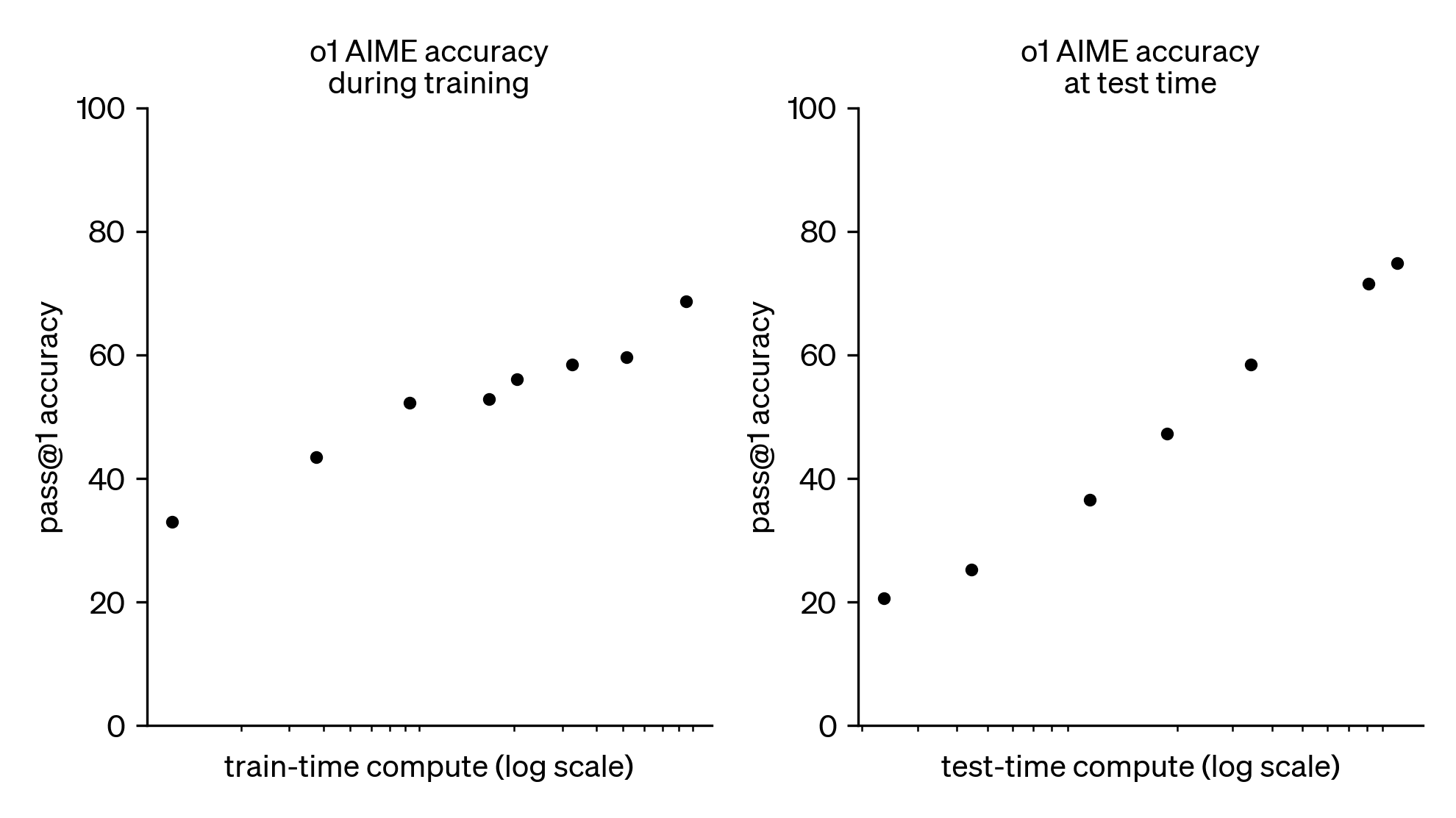

With the release of OpenAI’s o1 and o3 models, it seems likely that we are now contending with a new scaling paradigm: spending more compute on model inference at run-time reliably improves model performance. As shown below, o1’s AIME accuracy increases at a constant rate with the logarithm of test-time compute (OpenAI, 2024).

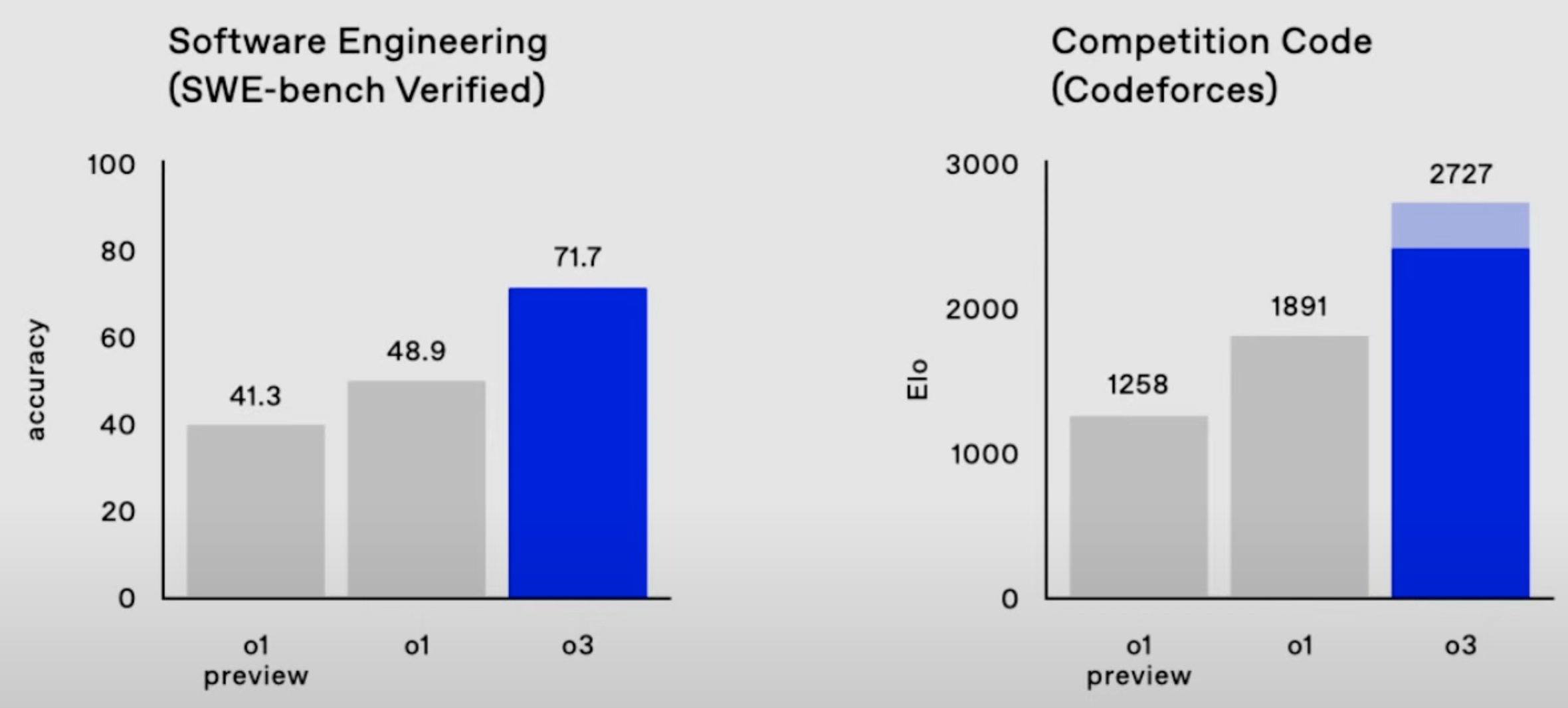

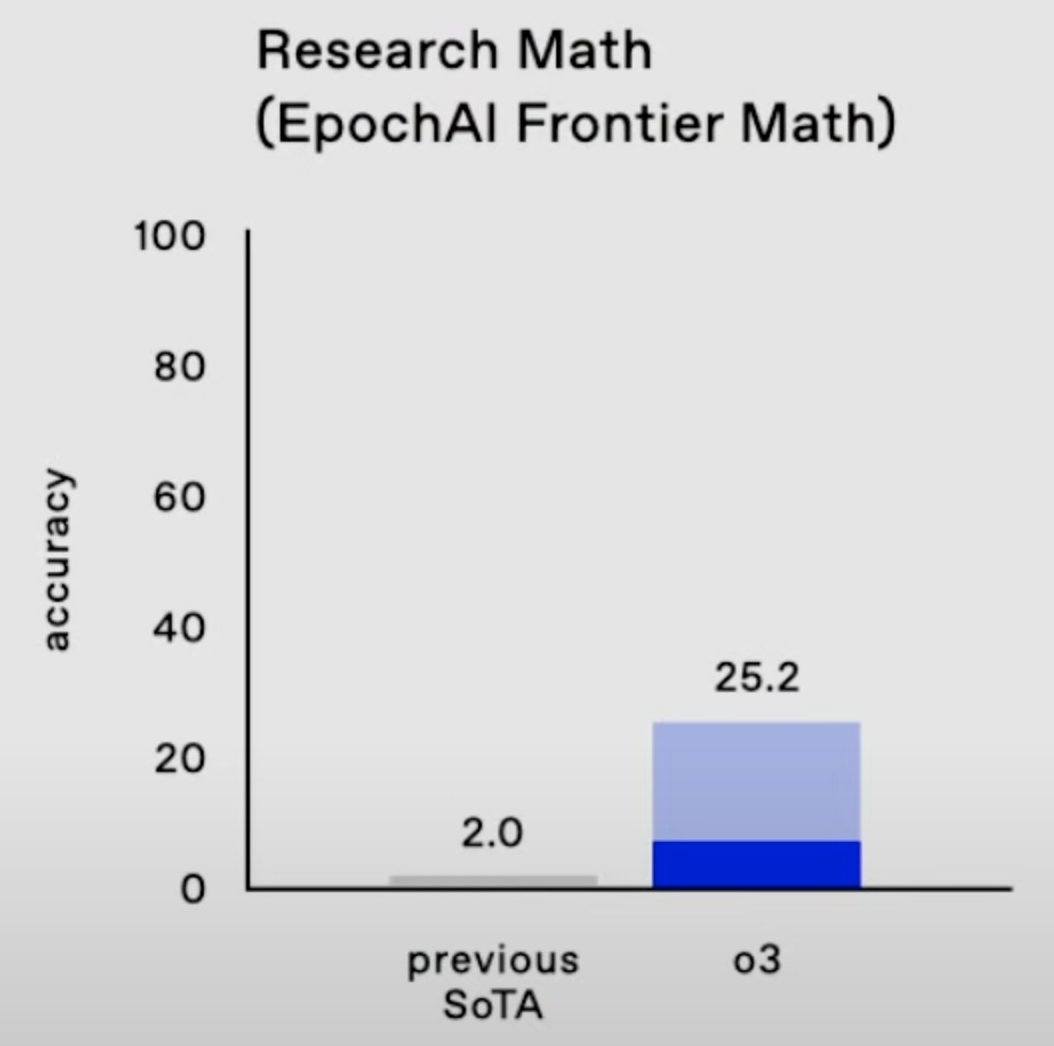

OpenAI’s o3 model continues this trend with record-breaking performance, scoring:

- 2727 on Codeforces, which makes it the 175th best competitive programmer on Earth;

- 25% on FrontierMath, where “each problem demands hours of work from expert mathematicians”;

- 88% on GPQA, where 70% represents PhD-level science knowledge;

- 88% on ARC-AGI, where the average Mechanical Turk human worker scores 75% on hard visual reasoning problems.

According to OpenAI, the bulk of model performance improvement in the o-series of models comes from increasing the length of chain-of-thought (and possibly further techniques like “tree-of-thought“) and improving the chain-of-thought (CoT) process with reinforcement learning. Running o3 at maximum performance is currently very expensive, with single ARC-AGI tasks costing ~$3k, but inference costs are falling by ~10x/year!

A recent analysis by Epoch AI indicated that frontier labs will probably spend similar resources on model training and inference.[1] Therefore, unless we are approaching hard limits on inference scaling, I would bet that frontier labs will continue to pour resources into optimizing model inference and costs will continue to fall. In general, I expect that the inference scaling paradigm is probably here to stay and will be a crucial consideration for AGI safety.

So what are the implications of an inference scaling paradigm for AI safety? In brief I think:

- AGI timelines are largely unchanged, but might be a year closer.

- There will probably be less of a deployment overhang for frontier models, as they will cost ~1000x more to deploy than expected, which reduces near-term risks from speed or collective superintelligence.

- Chain-of-thought oversight is probably more useful, conditional on banning non-language CoT, and this is great for AI safety.

- Smaller models that are more expensive to run are easier to steal, but harder to do anything with unless you are very wealthy, reducing the unilateralist’s curse.

- Scaling interpretability might be easier or harder; I’m not sure.

- Models might be subject to more RL, but this will be largely “process-based” and thus probably safer, conditional on banning non-language CoT.

- Export controls will probably have to adapt to handle specialized inference hardware.

AGI timelines

Interestingly, AGI timeline forecasts have not changed much with the advent of o1 and o3. Metaculus’ “Strong AGI” forecast seems to have dropped by 1 year to mid-2031 with the launch of o3; however, this forecast has fluctuated around 2031-2033 since March 2023. Manifold Market’s “AGI When?” market also dropped by 1 year, from 2030 to 2029, but this has been fluctuating lately too. It’s possible that these forecasting platforms were already somewhat pricing in the impacts of scaling inference compute, as chain-of-thought, even with RL augmentation, is not a new technology. Overall, I don’t have any better take than the forecasting platforms’ current predictions.

Deployment overhang

In Holden Karnofsky’s “AI Could Defeat All Of Us Combined” a plausible existential risk threat model is described, in which a swarm of human-level AIs outmanoeuvre humans due to AI’s faster cognitive speeds and improved coordination, rather than qualitative superintelligence capabilities. This scenario is predicated on the belief that “once the first human-level AI system is created, whoever created it could use the same computing power it took to create it in order to run several hundred million copies for about a year each.” If the first AGIs are as expensive to run as o3-high (costing ~$3k/task), this threat model seems much less plausible. I am consequently less concerned about a “deployment overhang,” where near-term models can be cheaply deployed to huge impact once expensively trained. This somewhat reduces my concern regarding “collective” or “speed” superintelligence, while slightly elevating my concern regarding “qualitative” superintelligence (see Superintelligence, Bostrom), at least for first-generation AGI systems.

Chain-of-thought oversight

If more of a model’s cognition is embedded in human-interpretable chain-of-thought compared to internal activations, this seems like a boon for AI safety via human supervision and scalable oversight! While CoT is not always a faithful or accurate description of a model’s reasoning, this can likely be improved. I’m also optimistic that LLM-assisted red-teamers will be able to prevent steganographic scheming or at least bound the complexity of plans that can be secretly implemented, given strong AI control measures.[2] From this perspective, the inference compute scaling paradigm seems great for AI safety, conditional on adequate CoT supervison.

Unfortunately, techniques like Meta’s Coconut (“chain of continuous thought”) might soon be applied to frontier models, enabling continuous reasoning without using language as an intermediary state. While these techniques might offer a performance advantage, I think they might amount to a tremendous mistake for AI safety. As Marius Hobbhahn says, “we’d be shooting ourselves in the foot” if we sacrifice legible CoT for marginal performance benefits. However, given that o1’s CoT is not visible to the user, it seems uncertain whether we will know if or when non-language CoT is deployed, unless this can be uncovered with adversarial attacks.

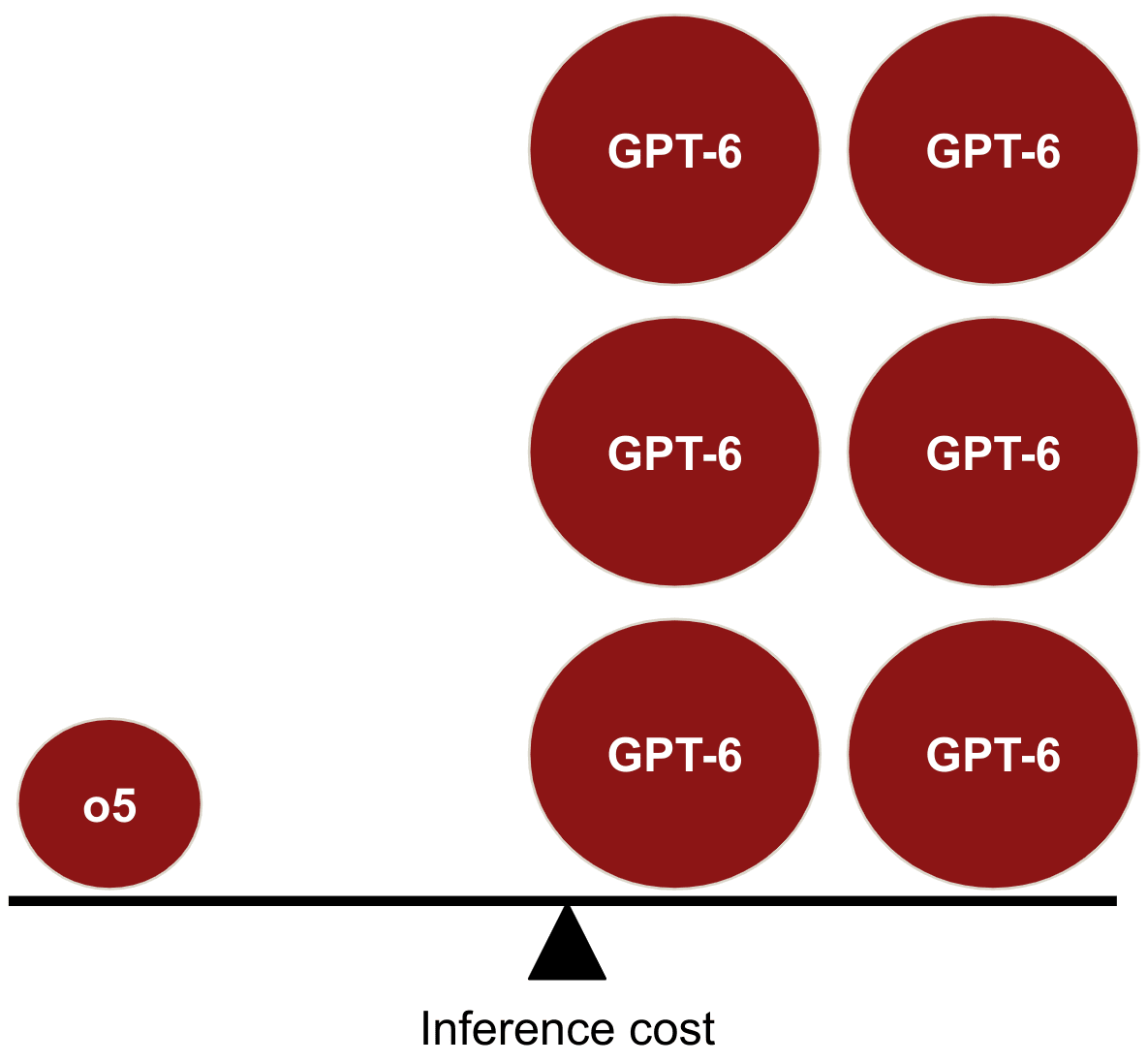

AI security

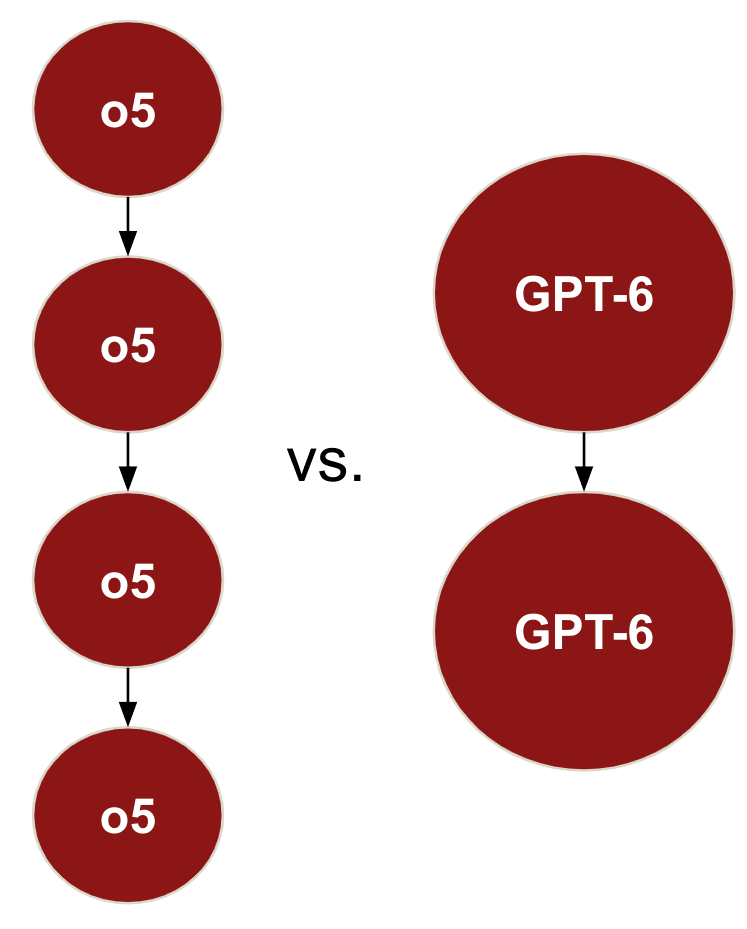

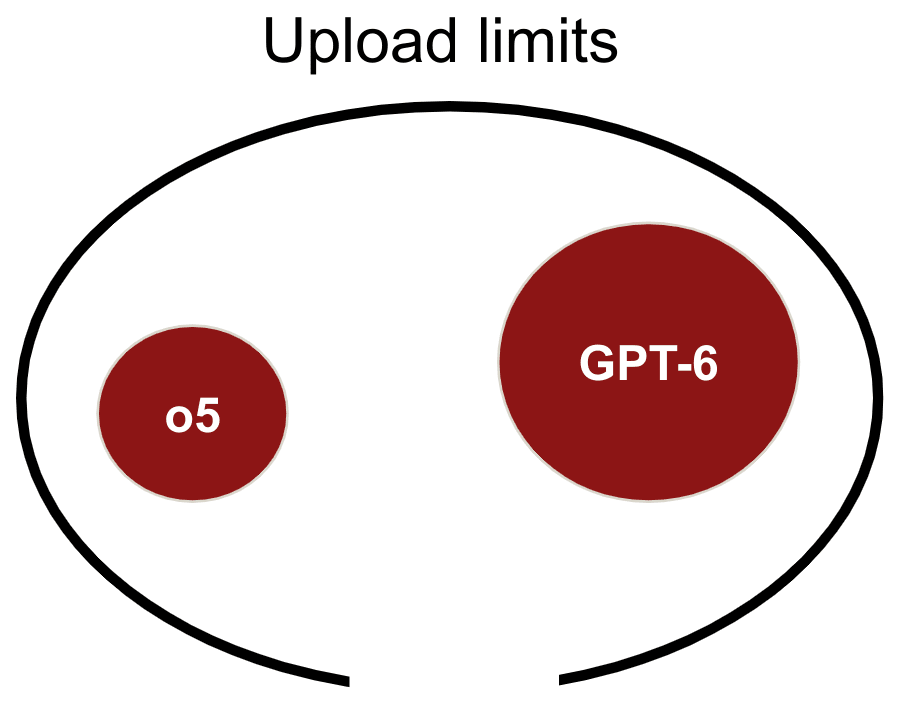

A proposed defence against nation state actors stealing frontier lab model weights is enforcing “upload limits” on datacenters where those weights are stored. If the first AGIs (e.g., o5) built in the inference scaling paradigm have smaller parameter count compared to the counterfactual equivalently performing model (e.g., GPT-6), then upload limits will be smaller and thus harder to enforce. In general, I expect smaller models to be easier to exfiltrate.

Conversely, if frontier models are very expensive to run, then this decreases the risk of threat actors stealing frontier model weights and cheaply deploying them. Terrorist groups who might steal a frontier model to execute an attack will find it hard to spend enough money or physical infrastructure to elicit much model output. Even if a frontier model is stolen by a nation state, the inference scaling paradigm might mean that the nation with the most chips and power to spend on model inference can outcompete the other. Overall, I think that the inference scaling paradigm decreases my concern regarding “unilateralist’s curse” scenarios, as I expect fewer actors to be capable of deploying o5 at maximum output relative to GPT-6.

Interpretability

The frontier models in an inference scaling paradigm (e.g., o5) are likely significantly smaller in parameter count than the counterfactual equivalently performing models (e.g., GPT-6), as the performance benefits of model scale can be substituted by increasing inference compute. Smaller models might allow for easier scaling of interpretability techniques such as “neuron labelling“. However, given that the hypothetical o5 and GPT-6 might contain a similar number of features, it’s possible that these would be more densely embedded in a smaller o5 and thus harder to extract. Smaller models trained to equivalent performance on the same dataset might exhibit more superposition, which might be more of a bottleneck to scaling interpretability than parameter count. At this point, I think the implications of inference scaling for AI interpretability are unclear and merit investigation.

More RL?

OpenAI has been open about using reinforcement learning (RL) on CoT to improve o-series model output. It is unclear to me whether o5 would be subject to more RL fine-tuning than GPT-6, but possible reasons to expect this include:

- Optimizing CoT via RL might provide cheaper performance improvements than optimizing base models via RL;

- RL feedback on long-chain CoT might allow for higher-quality signal than RL on shorter model outputs;

- OpenAI might be using some sort of “meta-level controller” to steer between different “tree-of-thought” branches in the o-series models, which would be cheaper to update with RL than an entire base model.

If the inference scaling paradigm incentivizes more RL fine-tuning this is somewhat concerning. Excessive optimization of AI systems via RL is the origin of many AI safety threat models, including “incentivizing power-seeking.” However, I expect RL on CoT to amount to “process-based supervision,” which seems inherently safer than “outcome-based supervision.” Overall, I think that this is weakly good news for AI safety, as I expect that AI labs would have used significant amounts of RL to enhance GPT-6 in the counterfactual world and process-based supervision is safer (conditional on the process being human-legible).

Edit: I no longer believe that RL on CoT straightforwardly amounts to process-based supervision (see Daniel Kokotajlo’s comment) and I think increased RL on CoT would be a negative update for AI safety.

Export controls

Different types of AI chips might be better at model inference than training, which might have implications for US semiconductor export controls. I expect AI labs to generally use specialized chips for training and inference to eke out more performance at lower cost. Any compute monitoring or on-chip security initiatives should consider how increased chip specialization impacts their efforts.

Overall, conditional on negligible effects on AGI timelines, I think that the advent of the inference compute scaling paradigm is weakly positive for AI safety. I welcome substantial feedback, as I am far from an expert on any of the topics I’ve covered and I wrote this fast to cover a gap in MATS’ curriculum.

- ^

I see this trade-off as analogous to the base-optimizer/mesa-optimizer trade-off discussed in “Risks from Learned Optimization“.

- ^

I think studying the performance bounds on steganography-assisted model scheming with unrestricted red-team access deserves much more attention.

I think this is missing a major piece of the self-play scaling paradigm, one which has been weirdly absent in most discussions of o1 as well: much of the point of a model like o1 is not to deploy it, but to generate training data for the next model. It was cool that o1’s accuracy scaled with the number of tokens it generated, but it was even cooler that it was successfully bootstrapping from 4o to o1-preview (which allowed o1-mini) to o1-pro to o3 to…

EDIT: given the absurd response to this comment, I’d point out that I do not think OA has achieved AGI and I don’t think they are about to achieve it either. I am trying to figure out what they think.

Every problem that an o1 solves is now a training data point for an o3 (eg. any o1 session which finally stumbles into the right answer can be refined to drop the dead ends and produce a clean transcript to train a more refined intuition). As Noam Brown likes to point out, the scaling laws imply that if you can search effectively with a NN for even a relatively short time, you can get performance on par with a model hundreds or thousands of times larger; and wouldn’t it be nice to be able to train on data generated by an advanced model from the future? Sounds like good training data to have!

This means that the scaling paradigm here may wind up looking a lot like the current train-time paradigm: lots of big datacenters laboring to train a final frontier model of the highest intelligence, which will usually be used in a low-search way and be turned into smaller cheaper models for the use-cases where low/no-search is still overkill. Inside those big datacenters, the workload may be almost entirely search-related (as the actual finetuning is so cheap and easy compared to the rollouts), but that doesn’t matter to everyone else; as before, what you see is basically, high-end GPUs & megawatts of electricity go in, you wait for 3-6 months, a smarter AI comes out.

I am actually mildly surprised OA has bothered to deploy o1-pro at all, instead of keeping it private and investing the compute into more bootstrapping of o3 training etc. (This is apparently what happened with Anthropic and Claude-3.6-opus – it didn’t ‘fail’, they just chose to keep it private and distill it down into a small cheap but strangely smart Claude-3.6-sonnet. And did you ever wonder what happened with the largest Gemini models or where those incredibly cheap, low latency, Flash models come from…?‡ Perhaps it just takes more patience than most people have.) EDIT: It’s not like it gets them much training data: all ‘business users’ (who I assume would be the majority of o1-pro use) is specifically exempted from training unless you opt-in, and it’s unclear to me if o1-pro sessions are trained on at all (it’s a ‘ChatGPT Pro’ level, and I can’t quickly find whether a professional plan is considered ‘business’). Further, the pricing of DeepSeek’s r1 series at something like a twentieth the cost of o1 shows how much room there is for cost-cutting and why you might not want to ship your biggest best model at all compared to distilling down to a small cheap model.

If you’re wondering why OAers† are suddenly weirdly, almost euphorically, optimistic on Twitter and elsewhere and making a lot of haha-only-serious jokes (EDIT: to be a little more precise, I’m thinking of Altman, roon, Brown, Sutskever, sever