Graceful Shutdown in Go: Practical Patterns by mkl95

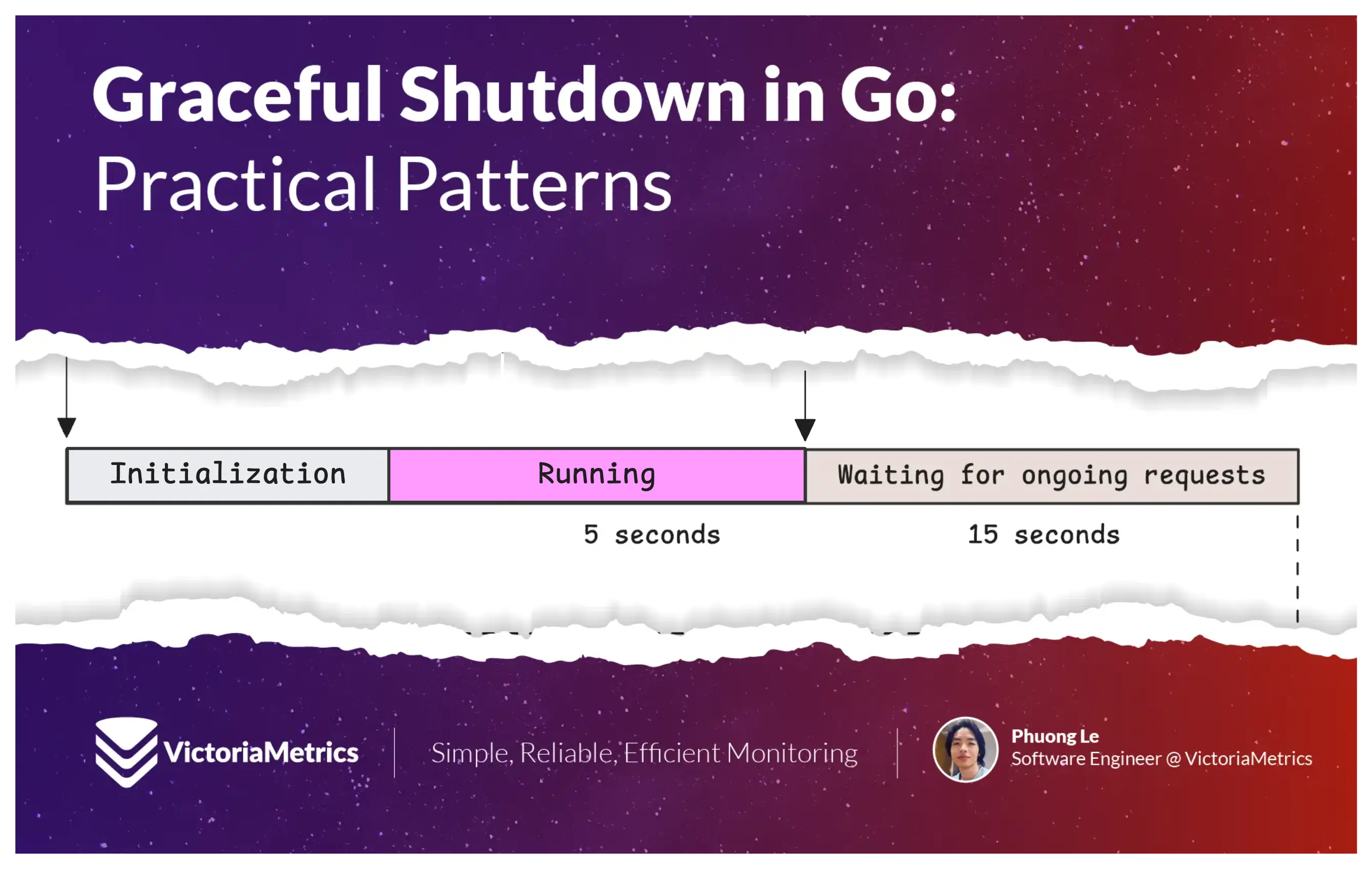

Graceful shutdown in any application generally satisfies three minimum conditions:

- Close the entry point by stopping new requests or messages from sources like HTTP, pub/sub systems, etc. However, keep outgoing connections to third-party services like databases or caches active.

- Wait for all ongoing requests to finish. If a request takes too long, respond with a graceful error.

- Release critical resources such as database connections, file locks, or network listeners. Do any final cleanup.

This article focuses on HTTP servers and containerized applications, but the core ideas apply to all types of applications.
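
The three conditions above can be sketched for an HTTP server roughly as follows. This is a minimal illustration, not the article's own implementation: `gracefulStop`, `runDemo`, and the `release` callback are hypothetical names, and the 5-second timeout is an arbitrary assumption. Only `http.Server.Shutdown` and `http.Server.Close` come from the standard library.

```go
package main

import (
	"context"
	"errors"
	"fmt"
	"net"
	"net/http"
	"time"
)

// gracefulStop is a hypothetical helper covering the three conditions above.
// release stands in for closing database pools, file locks, and so on.
func gracefulStop(srv *http.Server, timeout time.Duration, release func() error) error {
	ctx, cancel := context.WithTimeout(context.Background(), timeout)
	defer cancel()

	// Conditions 1 and 2: Shutdown closes the listeners so no new connections
	// are accepted, then waits for active requests until ctx expires.
	if err := srv.Shutdown(ctx); err != nil {
		// The deadline passed with requests still running: force-close them.
		_ = srv.Close()
		_ = release()
		return err
	}

	// Condition 3: release resources only after the handlers have finished.
	return release()
}

// runDemo starts a server on a random local port, stops it, and reports
// whether the release callback ran.
func runDemo() (released bool, err error) {
	ln, err := net.Listen("tcp", "127.0.0.1:0")
	if err != nil {
		return false, err
	}
	srv := &http.Server{Handler: http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		fmt.Fprintln(w, "ok")
	})}

	served := make(chan error, 1)
	go func() { served <- srv.Serve(ln) }()

	err = gracefulStop(srv, 5*time.Second, func() error {
		released = true
		return nil
	})
	if err != nil {
		return released, err
	}
	// After Shutdown, Serve returns http.ErrServerClosed.
	if serveErr := <-served; !errors.Is(serveErr, http.ErrServerClosed) {
		return released, serveErr
	}
	return released, nil
}

func main() {
	released, err := runDemo()
	fmt.Printf("released=%v err=%v\n", released, err)
}
```

Releasing resources after `Shutdown` returns, rather than before, keeps outgoing connections available to requests that are still finishing.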

1. Catching the Signal

Before we handle graceful shutdown, we first need to catch termination signals. These signals tell our application it’s time to exit and begin the shutdown process.

So, what are signals?

In Unix-like systems, signals are software interrupts. They notify a process that something has happened and it should take action. When a signal is sent, the operating system interrupts the normal flow of the process to deliver the notification.

Here are a few possible behaviors:

- Signal handler: A process can register a handler (a function) for a specific signal. This function runs when that signal is received.

- Default action: If no handler is registered, the process follows the default behavior for that signal. This might mean terminating, stopping, or continuing the process, or ignoring the signal.

- Unblockable signals: Some signals, like SIGKILL (signal number 9), cannot be caught or ignored. The operating system always applies their default action, which for SIGKILL is to terminate the process.

When your Go application starts, even before your main function runs, the Go runtime automatically registers signal handlers for many signals (SIGTERM, SIGQUIT, SIGILL, SIGTRAP, and others). However, for graceful shutdown, only three termination signals are typically important:

- SIGTERM (Termination): A standard and polite way to ask a process to terminate. It does not force the process to stop. Kubernetes sends this signal when it wants your application to exit before it forcibly kills it.

- SIGINT (Interrupt): Sent when the user wants to stop a process from the terminal, usually by pressing Ctrl+C.

- SIGHUP (Hang up): Originally used when a terminal disconnected. Now, it is often repurposed to signal an application to reload its configuration.

People mostly care about SIGTERM and SIGINT. SIGHUP is rarely used for shutdown today and is more often used to reload configuration. You can find more about this in SIGHUP Signal for Configuration Reloads.
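
One way to treat the three signals differently is a small dispatch loop, sketched below. The names `handleSignals`, `subscribe`, and `simulate`, and the `reload` callback, are hypothetical. `simulate` feeds hand-made signals through a channel so the example exits on its own; a real program would pass the channel returned by `subscribe` instead.

```go
package main

import (
	"fmt"
	"os"
	"os/signal"
	"syscall"
)

// handleSignals calls reload on every SIGHUP and returns the first
// SIGINT or SIGTERM it sees. It returns nil if the channel is closed.
func handleSignals(sigs <-chan os.Signal, reload func()) os.Signal {
	for sig := range sigs {
		switch sig {
		case syscall.SIGHUP:
			reload()
		case syscall.SIGINT, syscall.SIGTERM:
			return sig
		}
	}
	return nil
}

// subscribe registers for the three signals discussed above.
func subscribe() chan os.Signal {
	sigs := make(chan os.Signal, 1)
	signal.Notify(sigs, syscall.SIGHUP, syscall.SIGINT, syscall.SIGTERM)
	return sigs
}

// simulate injects two SIGHUPs and one SIGTERM by hand (no real signals
// are sent) and reports what the loop did with them.
func simulate() (reloads int, terminated bool) {
	sigs := make(chan os.Signal, 3)
	sigs <- syscall.SIGHUP
	sigs <- syscall.SIGHUP
	sigs <- syscall.SIGTERM
	sig := handleSignals(sigs, func() { reloads++ })
	return reloads, sig == syscall.SIGTERM
}

func main() {
	// In a real program: handleSignals(subscribe(), reloadConfig)
	reloads, terminated := simulate()
	fmt.Printf("reloads=%d terminated=%v\n", reloads, terminated)
}
```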

By default, when your application receives a SIGTERM, SIGINT, or SIGHUP, the Go runtime will terminate the application.

When your Go app gets a SIGTERM, the runtime first catches it using a built-in handler. It checks if a custom handler is registered. If not, the runtime disables its own handler temporarily, and sends the same signal (SIGTERM) to the application again. This time, the OS handles it using the default behavior, which is to terminate the process.

You can override this by registering your own signal handler using the os/signal package.

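    // Requires the imports "os", "os/signal", and "syscall".
    func main() {
        // Buffered, so a signal that arrives before we start receiving is not dropped.
        signalChan := make(chan os.Signal, 1)
        signal.Notify(signalChan, syscall.SIGINT, syscall.SIGTERM)

        // Setup work here

        // Block until SIGINT or SIGTERM arrives.
        <-signalChan
    }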
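
As an alternative to managing the channel by hand, Go 1.16 and later offer `signal.NotifyContext`, which cancels a context when one of the listed signals arrives. The sketch below is an illustration under assumptions: `selfSignalDemo` is a hypothetical name, the 2-second timeout is arbitrary, and the program sends SIGTERM to itself with `syscall.Kill` only so that it exits without outside help. That call is available on Unix-like systems, not on Windows.

```go
package main

import (
	"context"
	"errors"
	"fmt"
	"os/signal"
	"syscall"
	"time"
)

// selfSignalDemo waits for SIGINT or SIGTERM through a context.
// For demonstration it sends SIGTERM to its own process first.
func selfSignalDemo() error {
	ctx, stop := signal.NotifyContext(context.Background(), syscall.SIGINT, syscall.SIGTERM)
	defer stop() // unregister; later signals get their default behavior again

	// Demo only: play the role of the orchestrator and signal ourselves.
	if err := syscall.Kill(syscall.Getpid(), syscall.SIGTERM); err != nil {
		return err
	}

	select {
	case <-ctx.Done():
		// The signal arrived; shutdown work would start here.
		return nil
	case <-time.After(2 * time.Second):
		return errors.New("no termination signal received before the timeout")
	}
}

func main() {
	if err := selfSignalDemo(); err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println("termination signal received, starting shutdown")
}
```

A context is convenient here because the same `ctx` can be passed to any component that should stop when the signal arrives.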

9 Comments

wbl

If a distributed system relies on clients gracefully exiting to work, the system will eventually break badly.

evil-olive

another factor to consider is that if you have a typical Prometheus `/metrics` endpoint that gets scraped every N seconds, there's a period in between the "final" scrape and the actual process exit where any recorded metrics won't get propagated. this may give you a false impression about whether there are any errors occurring during the shutdown sequence.

it's also possible, if you're not careful, to lose the last few seconds of logs from when your service is shutting down. for example, if you write to a log file that is watched by a sidecar process such as Promtail or Vector, and on startup the service truncates and starts writing to that same path, you've got a race condition that can cause you to lose logs from the shutdown.

gchamonlive

This is one of the things I think Elixir is really smart in handling. I'm not very experienced in it, but it seems to me that having your processes designed around tiny VM processes that are meant to panic, quit and get respawned eliminates the need to have to intentionally create graceful shutdown routines, because this is already embedded in the application architecture.

deathanatos

> After updating the readiness probe to indicate the pod is no longer ready, wait a few seconds to give the system time to stop sending new requests.

> The exact wait time depends on your readiness probe configuration

A terminating pod is not ready by definition. The service will also mark the endpoint as terminating (and as not ready). This occurs on the transition into Terminating; you don't have to fail a readiness check to cause it.

(I don't know about the ordering of the SIGTERM & the various updates to the objects such as Pod.status or the endpoint slice; there might be a small window after SIGTERM where you could still get a connection, but it isn't the large "until we fail a readiness check" TFA implies.)

(And as someone who manages clusters, honestly that infinitesimal window probably doesn't matter. Just stop accepting new connections, gracefully close existing ones, and terminate reasonably fast. But I feel like half of the apps I work with fall into either a bucket of "handle SIGTERM & take forever to terminate" or "fail to handle SIGTERM (and take forever to terminate)".)

giancarlostoro

I had a coworker that would always say, if your program cannot cleanly handle Ctrl+C and a few other commands to close it, then it's written poorly.

zdc1

I've been bitten by the surprising amount of time it takes for Kubernetes to update loadbalancer target IPs in some configurations. For me, 90% of the graceful shutdown battle was just ensuring that traffic was actually being drained before pod termination.

Adding a global preStop hook with a 15 second sleep did wonders for our HTTP 503 rates. This creates time between when the loadbalancer deregistration gets kicked off, and when SIGTERM is actually passed to the application, which in turn simplifies a lot of the application-side handling.

eberkund

I created a small library for handling graceful shutdowns in my projects: https://github.com/eberkund/graceful

I find that I typically have a few services that I need to start-up and sometimes they have different mechanisms for start-up and shutdown. Sometimes you need to instantiate an object first, sometimes you have a context you want to cancel, other times you have a "Stop" method to call.

I designed the library to help me consolidate this all in one place with a unified API.

cientifico

We've adopted Google Wire for some projects at JustWatch, and it's been a game changer. It's surprisingly under the radar, but it helped us eliminate messy shutdown logic in Kubernetes. Wire forces clean dependency injection, so now everything shuts down in order instead of… well, who knows :-D

https://go.dev/blog/wire

https://github.com/google/wire

liampulles

I tend to use a waitgroup plus context pattern. Any internal service which needs to wind down for shutdown gets a context which it can listen to in a goroutine to start shutting down, and a waitgroup to indicate that it is finished shutting down.

Then the main app goroutine can cancel the context when it wants to shut down, and block on the waitgroup until everything is closed.
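
The waitgroup plus context pattern described in this comment could be sketched as below. This is one possible reading, not the commenter's actual code: `worker`, `runAndStop`, and the service names are hypothetical.

```go
package main

import (
	"context"
	"fmt"
	"sync"
)

// worker is a hypothetical internal service. It waits for the context to be
// cancelled, winds down, and marks itself finished on the WaitGroup.
func worker(ctx context.Context, wg *sync.WaitGroup, name string, stopped chan<- string) {
	defer wg.Done()
	<-ctx.Done()
	// Wind-down work for this service would go here.
	stopped <- name
}

// runAndStop starts one worker per name, cancels the shared context, waits
// for all workers to finish, and returns how many reported that they stopped.
func runAndStop(names []string) int {
	ctx, cancel := context.WithCancel(context.Background())
	var wg sync.WaitGroup
	stopped := make(chan string, len(names)) // buffered so workers never block

	for _, name := range names {
		wg.Add(1)
		go worker(ctx, &wg, name, stopped)
	}

	cancel()  // the main goroutine decides it is time to shut down
	wg.Wait() // block until every worker has finished winding down
	close(stopped)

	count := 0
	for range stopped {
		count++
	}
	return count
}

func main() {
	n := runAndStop([]string{"http", "consumer", "scheduler"})
	fmt.Println("workers stopped:", n)
}
```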