I gave a talk at DjangoCon US 2022 in San Diego last month about productivity on personal projects, titled “Massively increase your productivity on personal projects with comprehensive documentation and automated tests”.

The alternative title for the talk was Coping strategies for the serial project hoarder.

I’m maintaining a lot of different projects at the moment. Somewhat unintuitively, the way I’m handling this is by scaling down techniques that I’ve seen working for large engineering teams spread out across multiple continents.

The key trick is to ensure that every project has comprehensive documentation and automated tests. This scales my productivity horizontally, by freeing me up from needing to remember all of the details of all of the different projects I’m working on at the same time.

You can watch the talk on YouTube (25 minutes). Alternatively, I’ve included a detailed annotated version of the slides and notes below.

This was the title I originally submitted to the conference. But I realized a better title was probably…

Coping strategies for the serial project hoarder

This video is a neat representation of my approach to personal projects: I always have a few on the go, but I can never resist the temptation to add even more.

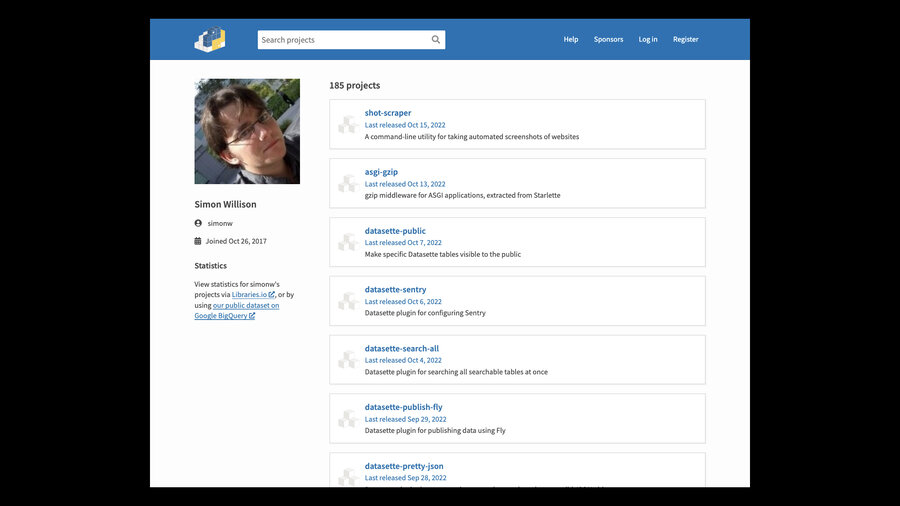

My PyPI profile (which is only five years old) lists 185 Python packages that I’ve released. Technically I’m actively maintaining all of them, in that if someone reports a bug I’ll push out a fix. Many of them receive new releases at least once a year.

Aside: I took this screenshot using shot-scraper with a little bit of extra JavaScript to hide a notification bar at the top of the page:

    shot-scraper 'https://pypi.org/user/simonw/' \
      --javascript "
        document.body.style.paddingTop = 0;
        document.querySelector(
          '#sticky-notifications'
        ).style.display = 'none';
      " --height 1000

How can one individual maintain 185 projects?

Surprisingly, I’m using techniques that I’ve scaled down from working at a company with hundreds of engineers.

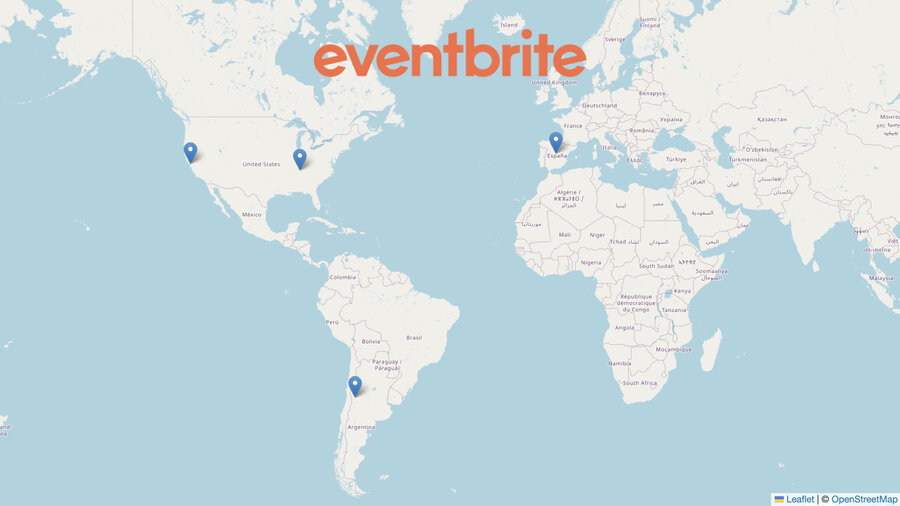

I spent seven years at Eventbrite, during which time the engineering team grew to span three different continents. We had major engineering centers in San Francisco, Nashville, Mendoza in Argentina and Madrid in Spain.

Consider timezones: engineers in Madrid and engineers in San Francisco had almost no overlap in their working hours. Good asynchronous communication was essential.

Over time, I noticed that the teams that were most effective at this scale were the teams that had a strong culture of documentation and automated testing.

As I started to work on my own array of smaller personal projects, I found that the same discipline that worked for large teams somehow sped me up, when intuitively I would have expected it to slow me down.

I wrote an extended description of this in The Perfect Commit.

I’ve started structuring the majority of my work in terms of what I think of as “the perfect commit”—a commit that combines implementation, tests, documentation and a link to an issue thread.

It’s important to note that, as software engineers, our job generally isn’t to write new software: it’s to make changes to existing software.

As such, the commit is our unit of work. It’s worth us paying attention to how we can make our commits as useful as possible.

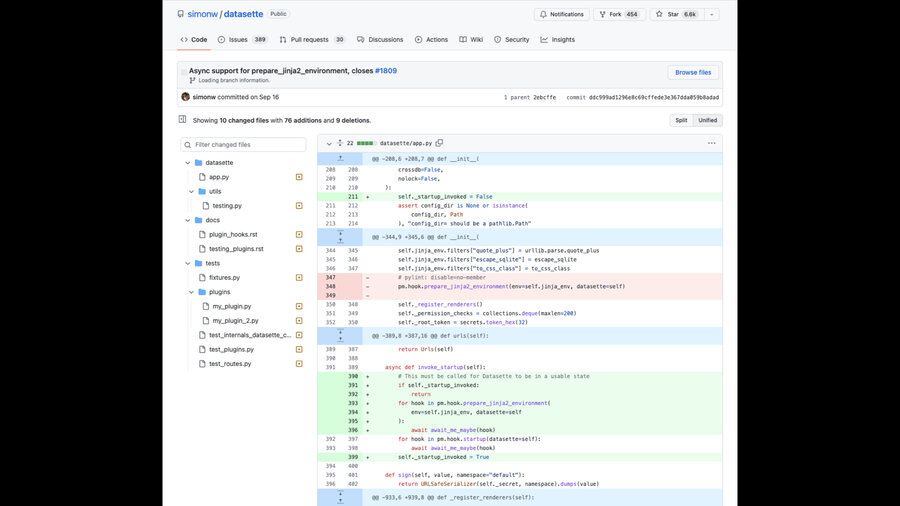

Here’s a recent example from one of my projects, Datasette.

It’s a single commit which bundles together the implementation, some related documentation improvements and the tests that show it works. And it links back to an issue thread from the commit message.

Let’s talk about each component in turn.

There’s not much to be said about the implementation: your commit should change a thing!

It should only change one thing, but what that actually means varies on a case by case basis.

It should be a single change that can be documented, tested and explained independently of other changes.

(Being able to cleanly revert it is a useful property too.)

The goal of the tests that accompany a commit is to prove that the new implementation works.

If you apply the implementation the new tests should pass. If you revert it the tests should fail.

I often use git stash to try this out.

If you tell people they need to write tests for every single change they’ll often push back that this is too much of a burden, and will harm their productivity.

But I find that the incremental cost of adding a test to an existing test suite keeps getting lower over time.

The hard bit of testing is getting a testing framework set up in the first place, with a test runner, fixtures, objects under test and suchlike.

Once that’s in place, adding new tests becomes really easy.

So my personal rule is that every new project starts with a test. It doesn’t really matter what that test does—what matters is that you can run pytest to run the tests, and you have an obvious place to start building more of them.
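As a rough sketch of how small that first test can be: the function `example_function` below is a hypothetical placeholder, not taken from any of my projects, and the library code and the test are collapsed into one file for brevity.

```python
def example_function():
    # Hypothetical placeholder for the project's first real function
    return 1 + 1


def test_example_function():
    # pytest collects functions whose names start with "test_"
    assert example_function() == 2
```

Saved in a file named something like `test_example.py`, this should be picked up by running `pytest` in the project directory. The test itself is trivial; the point is that the test runner now works and there is an obvious place to add the next test.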

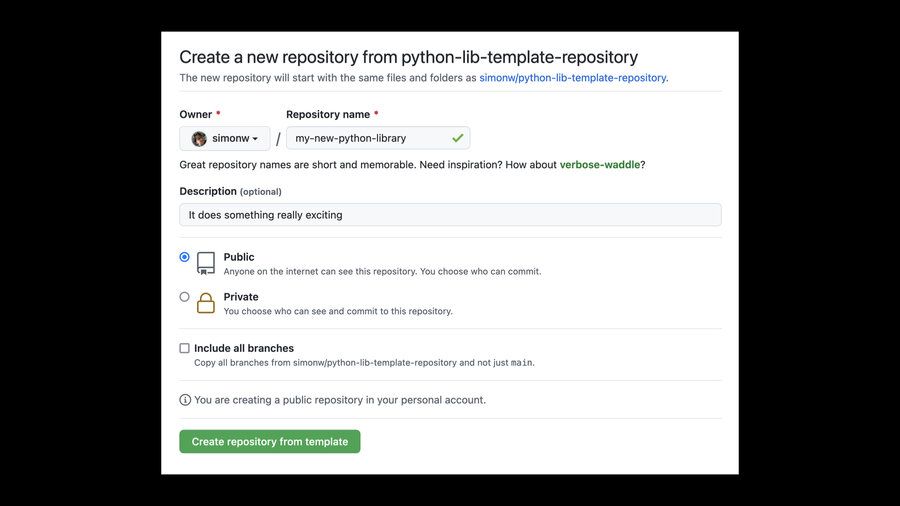

I maintain three cookiecutter templates to help with this, for the three kinds of projects I most frequently create:

- simonw/python-lib for Python libraries

- simonw/click-app for command line tools

- simonw/datasette-plugin for Datasette plugins

Each of these templates creates a project with a setup.py file, a README, a test suite and GitHub Actions workflows to run those tests and ship tagged releases to PyPI.

This is a hill that I will die on: your documentation must live in the same repository as your code!

You often see projects keep their documentation somewhere else, like in a wiki.

Inevitably it goes out of date. And my experience is that if your documentation is out of date people will lose trust in it, which means they’ll stop reading it and stop contributing to it.

The gold standard of documentation has to be that it’s reliably up to date with the code.

The only way you can do that is if the documentation and code are in the same repository.

This gives you versioned snapshots of the documentation that exactly match the code at that time.

More importantly, it means you can enforce it through code review. You can say in a PR “this is great, but don’t forget to update this paragraph on this page of the documentation to reflect the change you’re making”.

If you do this you can finally get documentation that people learn to trust over time.

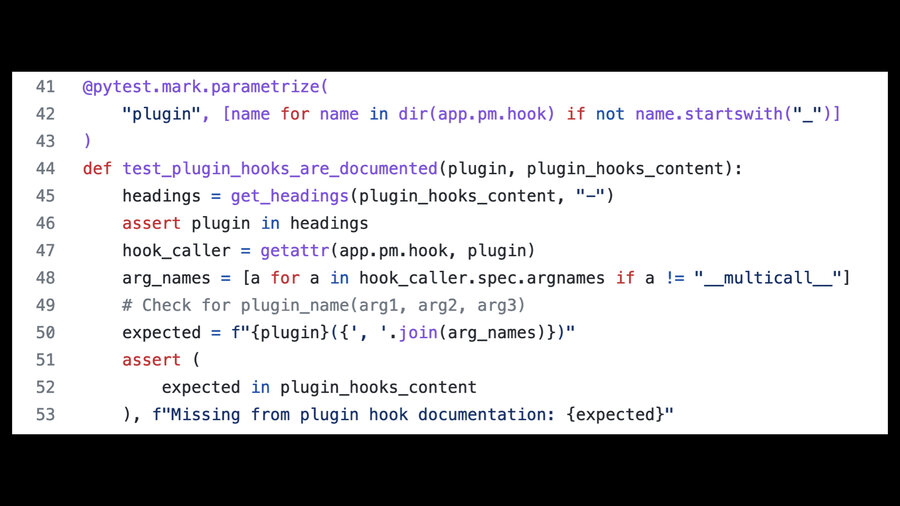

Another trick I like to use is something I call documentation unit tests.

The idea here is to use unit tests to enforce that concepts introspected from your code are at least mentioned in your documentation.

I wrote more about that in Documentation unit tests.

![Screenshot showing pytest running 26 passing tests, each with a name like test_plugin_hook_are_documented[filters_from_request]](https://static.simonwillison.net/static/2022/djangocon-productivity/productivity.016.jpeg)

Here’s an example. Datasette has a test that scans through each of the Datasette plugin hooks and checks that there is a heading for each one in the documentation.

The test itself is pretty simple: it uses pytest parametrization to look through every introspected plugin hook name, and for each one checks that it has a matching heading in the documentation.
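Here is a minimal sketch of that pattern. It is not Datasette's actual test: the `HookSpecs` class, the hook names in it and the inline `DOCS` string are hypothetical stand-ins, and it assumes reStructuredText-style headings (a title line followed by a row of dashes). A real project would introspect its own code and read the documentation from a file in the repository.

```python
import re

import pytest


class HookSpecs:
    # Hypothetical stand-in for the object that declares plugin hooks
    def prepare_connection(self): ...
    def register_routes(self): ...


# Hypothetical stand-in for the contents of a documentation file
DOCS = """
prepare_connection(conn)
------------------------
Called when a new connection is created.

register_routes()
-----------------
Register additional URL routes.
"""


def hook_names():
    # Introspect the public names defined on the hook specification class
    return sorted(name for name in vars(HookSpecs) if not name.startswith("_"))


def documented_headings(text):
    # A heading is assumed to be "name(args)" followed by a line of dashes
    return set(re.findall(r"^(\w+)\(.*\)\n-+$", text, flags=re.MULTILINE))


@pytest.mark.parametrize("hook", hook_names())
def test_plugin_hooks_are_documented(hook):
    # One test case per hook, so a failure names the undocumented hook
    assert hook in documented_headings(DOCS)
```

Because the test is parametrized, adding a new hook without a matching heading produces a single failing test case that names the missing hook.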

The final component of my perfect commit is this: every co