November 25, 2022

If you’ve been on the internet at all lately, you’ve seen AI generated art and discussion of the impact of the tools. You’ve also probably seen a lot of hate for AI generated art. There’s a lot of drama going on, as art focused communities across the internet decide how to handle this. Does AI generated/assisted art need tagged as such? Should it be allowed at all? There’s the whole Deviant Art situation (ArsTechnica). It’s all sorta messy right now, so let’s talk about it.

AI to generate art has been around a while, it’s just that until recently, it looked pretty awful. Then, basically overnight, we got a collection of models – which are getting better rapidly still – that don’t just look okay, they look better than what a lot of budding artists are capable of. This, obviously, pisses a lot of people off. More on this in a bit.

Let’s start by talking about what the tools are capable of:

Text-to-image (txt2img)

#

This is the big headline feature.

Describe what you want, get an image out. Sometimes it’s unreasonably effective, sometimes it’s just unreasonable. More often than not, it’ll take a hundred or so generations of turning and refining your prompt to actually get what you want.

This and image-to-image generation (more in a moment) tend to be what scare artists the most. txt2img for the risk of putting artists out of a job, and img2img for art theft.

txt2img prompt crafting is a bit of an art in its own right, as getting the model to spit out what you want isn’t always trivial.

Many txt2img systems also allow specifying a negative prompt, which can be used to say certain things should not be in the picture.

Stable diffusion running Waifu Diffusion v1.3. Prompt: an Anime girl with long rainbow ((Iridescent hair)) and sharp facial features singing into a microphone and wearing headphones, art station, photograph, realistic, cyberpunk, gothic, eye shadow → img2img → inpaint. txt2img alone was not enough to get this result.

Image-to-image (img2img)

#

Text-to-image is great, but image-to-image is scary powerful too. If you’re going through pictures and you find something close to what you want, you can pluck it out and work with variations of it.

Stable diffusion running yiffy-e18.ckpt. Prompt: A anthro wolf furry in a jean vest with pins. Cyberpunk, punk rock.

The prior image through img2img

The prior image with the background through img2img again.

Note, the image being used for img2img does not need to be generated by the model. You can use an existing picture, such as a very poorly drawn landscape in 3 colors in MS paint, and let the model work off of that and get astounding results. img2img also lets you specify how much an image should change, from no change to a totally new image based on the prompt.

In painting

#

When photo editing sounds too tedious, just erase and generate!

Original (generated by Stable diffusion)

Area filled in black marked to be replaced

Result

Out painting

#

Need a bigger picture? Out painting can be used to extend an image beyond its original borders. Check out the timelapse DALL·E building up a massive version of Girl with a Pearl Earring by Johannes Vermeer at https://openai.com/blog/dall-e-introducing-outpainting/.

Making GIFS

#

No artist could reasonably do this. This is effectively a new art form.

There’s multiple takes on this. Some will navigate though different prompts on the same seed, some will change the seed on the same prompt (like the example here)

Upscaling

#

Upscaling tools have been around for a while now, but along with the rise of the new AI art models they have gotten much better. There’s a huge number of different algorithms for doing this upscaling, but the gist is they take a low resolution image and make it much higher resolution, adding in details. These images do not need to be originally generated by the AI tools. You can upload an existing picture. Here, I’ve generated an octopus picture with Stable Diffusion and then upscaled it.

You’ll notice the upscaled version has dramatically less noise in the image, which actually makes the octopus look slightly less natural.

Where the AI models struggle (for now):

#

Ref sheets & custom characters

#

So far as I can tell, you’re probably not going to be using any of the AI art tools to make a reference sheet for a character, nor be able to get it to generate images that consistently have the appearance of a custom character. Traits will change, details will be lost. There’s a lot of money to be made in character design, and at least for now, I don’t think these tools will be able to make these or use them to make original art.

This might be different if you have an extremely generic character such as “red head with big muscles” or “a white wolf with red eyes”, but otherwise, no way.

It also tends to suck at pieces with many characters. It likes one subject, maybe two, in the piece.

Counting

#

Getting a specific number of anything can be difficult, especially body parts (eyes, legs, etc.). This otter should have 3 eyes, but convincing the model to actually add those eyes is tough.

portrait of an otter with (((three eyes)))

Changing the text to a number and doing a few more generations, I eventually ran into this:

portrait of an otter with (((3 eyes)))

Body horror

#

If you look closely above, you’ll notice the Octopus image generated for the upscaling example has a tentacle that forms a loop. Now, in the AI’s defense, an octopus is a pretty hard prompt, still, getting extra or incorrect limbs is a pretty common occurrence. You’ll also occasionally find doubled up torsos, weird eyes and mouths, and unsettling numbers of fingers. Generally, there’s plenty of body horror to be found. The following examples are actually on the pretty tame side. You’ll often get some really, really creepy things out

With my experience using Stable Diffusion, It does particularly poorly if you attempt to generate images outside of the size the model was trained for (512×512, square images).

((Portrait)) of a ((single)) Anime waifu with long Iridescent hair and sharp facial features singing into a studio microphone and wearing studio headphones, in a recording booth – Using Waifu Diffusion

A couple getting married, wedding, alter photo, dslr, photograph

Text

#

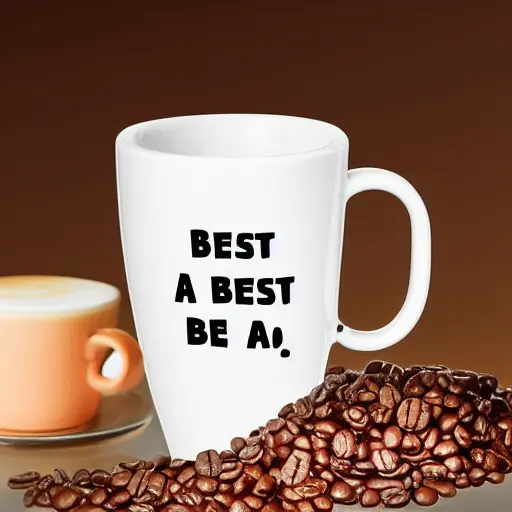

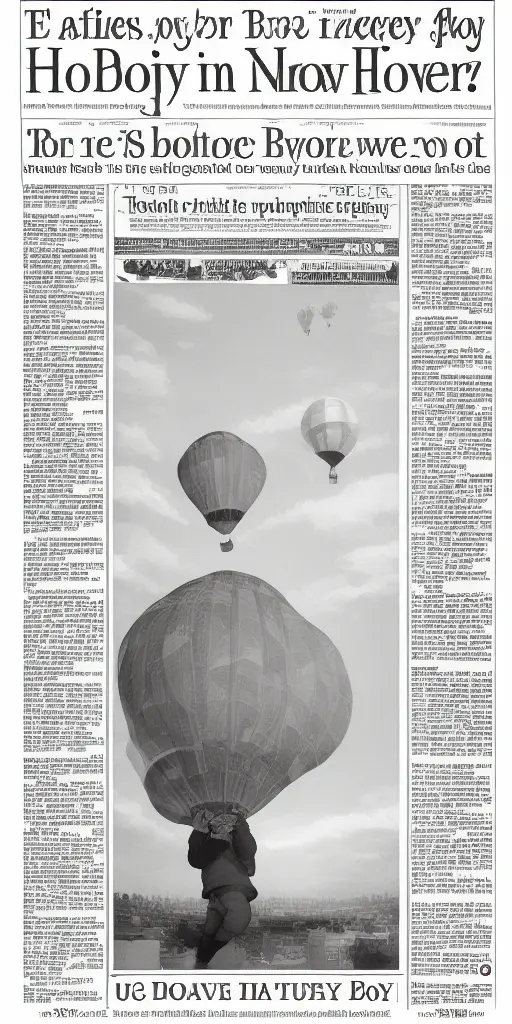

While these examples are a bit extreme as they explicitly ask for text, the point stands that generating anything with text on it (t-shirts, mugs, etc) – intentionally or not – tends to make people feel like they might be having a stroke.

A coffee cup that says "Worlds best dad!"

Newspaper headline story, "Boy in hot air balloon"

This may be changing soon, see Nvidia’s eDiffi is an impressive alternative to DALL-E 2 or Stable Diffusion (the Decoder). I don’t imagine it’ll be spitting out full comics any time soon, but I could be wrong.

3D art

#

3D art is getting there, and getting there rapidly. There are papers where it’s been done – see https://deepimagination.cc/Magic3D/ – but currently they’re all a bit… off? Regardless, it’s already to a point where I’d expect it to be hitting 3D artist’s workflows within a year.

So it’s not just generation?

#

Clearly. Even just generating su