When I was in high school, I took the AMC 12, an exam run by the American Mathematics Competitions. The exam has 25 multiple-choice questions. The questions are challenging, but not impossible. Given enough time, I suspect most of the seniors at my fancy prep school would be able to do quite well on it. The problem is that you only had 75 minutes to complete the exam: 3 minutes per question. It was, obviously, not enough. The time limit did a good job of filtering for folks who really knew how to get to the right insight quickly. And rightly so: the AMC 12 is the first step toward qualifying for the International Mathematical Olympiad, among other things. Still, the time limit made the test artificially more difficult.

I never did particularly well at the AMC exams. I don’t, like, enjoy math very much — that’s why I went into AI! But also, I was frustrated by the time limits, and the artificial difficulty. In the real world, you might spend a lot of time thinking about a problem. And, in fact, it’s almost tautologically true that the really interesting problems worth solving take a lot of time to think about.

One thing that’s weird about a lot of AI models is that we expect them to behave like ‘general intelligence’ but do not give them time to actually reason through a problem. Many LLMs are taking the AMC on ‘artificially hard’ mode, except they don’t get 3 minutes per question; they have to respond instantly. Part of the reason is that, for a while, it was not even obvious what it meant for an LLM to ‘take more time’. If you give a human a test like the AMC, they can tell you “hey, I need another minute or two to solve this problem” and do the equivalent of spending more CPU cycles on it, or whatever. More generally, a human can decide how long they need to chew on a problem before committing to an answer. LLMs can’t really do that. A standard LLM spends a fixed amount of compute on each token it produces, determined by the size of the model, and it can only ‘look at’ as many tokens as fit in its context window. If it has to commit to an answer right away, anything that requires more reasoning than that budget allows isn’t going to work.
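To make the idea of a fixed budget a bit more concrete, here is a back-of-the-envelope sketch. It assumes the common rule of thumb that a transformer with N parameters does roughly 2N floating-point operations per generated token (ignoring the attention term), and the 7B model size is a hypothetical example. The per-token cost is pinned by model size, so the only knob left is how many tokens the model gets to produce before it answers.

```python
# Rule of thumb (an approximation, not an exact count): a decoder-only
# transformer with N parameters spends about 2 * N FLOPs per generated token.

def approx_generation_flops(n_params: int, n_tokens: int) -> int:
    """Approximate FLOPs to generate n_tokens with an n_params-parameter model."""
    return 2 * n_params * n_tokens


if __name__ == "__main__":
    n_params = 7_000_000_000  # hypothetical 7B-parameter model
    # Answering immediately with one token vs. writing out 500 tokens of work first.
    print(f"{approx_generation_flops(n_params, 1):.2e}")    # 1.40e+10
    print(f"{approx_generation_flops(n_params, 500):.2e}")  # 7.00e+12
```

Under this approximation, a model that answers in one token gets a tiny, fixed slice of compute no matter how hard the question is; letting it write 500 tokens first buys 500 times as much.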

OpenAI has been working on this problem for a while, and has put a lot of weight behind an idea known as ‘inference time compute’ (sometimes called test-time compute).

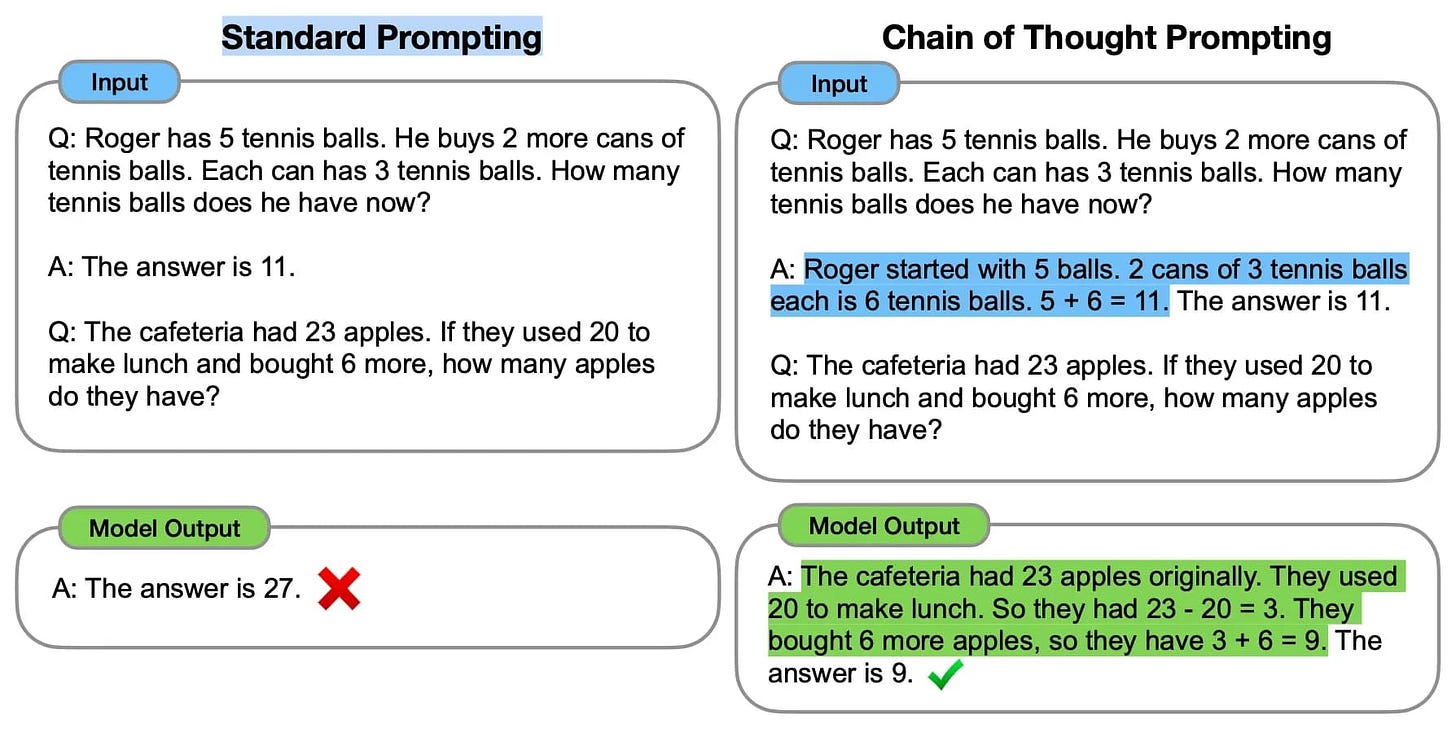

The earliest version of this was simply asking the LLM to show its work, a technique known as chain-of-thought prompting. Researchers discovered that if you asked an LLM to write out, step by step, how it solved a problem, its accuracy went up.

It was theorized that this was kinda sorta equivalent to giving the LLM ‘more time’ to solve the problem. More advanced inference time compute solutions include things like:

- Having an LLM output its intermediate thinking to a scratchpad, and having a different LLM pick up the intermediate output;
- Giving the LLM special ‘reasoning tokens’ that allow it to go back and edit or change things it previously said;
- Running multiple ‘reasoning threads’ in parallel and then choosing the one that is most likely to get to the best answer;
- Running multiple ‘experts’ in parallel and then choosing the answer that the majority of them reach;
- Using reinforcement learning on the reasoning output of an LLM to optimize for ‘better’ reasoning.
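Two of the techniques above, picking the most promising of several reasoning threads and taking a majority vote across independent attempts, are easy to sketch in a few lines. The code below is a toy under stated assumptions: `noisy_solver` is hypothetical and stands in for sampling one reasoning thread from a model, it is right 40% of the time, and otherwise it scatters its guesses across wrong answers. Majority voting here is the idea the research literature calls self-consistency; `best_of_n` assumes some scoring function (for example a learned verifier) that the code does not supply.

```python
import random
from collections import Counter
from typing import Callable, List

CORRECT = "42"  # hypothetical right answer for a toy problem
WRONG = [str(n) for n in range(100) if str(n) != CORRECT]


def noisy_solver(rng: random.Random, p_correct: float = 0.4) -> str:
    """Hypothetical stand-in for sampling one reasoning thread from a model.

    Returns CORRECT with probability p_correct, otherwise a random wrong answer.
    """
    if rng.random() < p_correct:
        return CORRECT
    return rng.choice(WRONG)


def majority_vote(answers: List[str]) -> str:
    """Return the most common answer (ties go to the one seen first)."""
    return Counter(answers).most_common(1)[0][0]


def best_of_n(candidates: List[str], score: Callable[[str], float]) -> str:
    """Return the candidate that a scoring function (e.g. a verifier) rates highest."""
    return max(candidates, key=score)


def majority_accuracy(n_samples: int, trials: int = 1000, seed: int = 0) -> float:
    """Estimate how often majority voting over n_samples attempts is correct."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        answers = [noisy_solver(rng) for _ in range(n_samples)]
        if majority_vote(answers) == CORRECT:
            hits += 1
    return hits / trials


if __name__ == "__main__":
    for n in (1, 5, 25):
        print(f"{n:>2} samples: {majority_accuracy(n):.2f}")
```

The trade is explicit: every extra sample costs another full round of generation, and in exchange the most common answer is far more likely to be right than any single attempt, because in this toy setup the wrong answers rarely agree with each other.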

The core idea behind all of these things is to dynamically increase the amount of time and compute the LLM spends answering a question, in exchange for accuracy. Harder questions get more compute, as determined by the LLM. This technology has been behind OpenAI’s o-series models, including the latest public o1 model and the very-impressive-but-still-private o3 model (discussed here).
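The ‘harder questions get more compute’ idea can be illustrated with a toy adaptive scheme: keep sampling answers until they agree strongly enough, and stop early when they do. This is not how OpenAI’s o-series models allocate compute, since those details aren’t public; it is a minimal sketch that assumes agreement between samples is a usable proxy for difficulty. `make_solver`, the 70% agreement threshold, and the sample limits are all hypothetical choices.

```python
import random
from collections import Counter
from typing import Callable, Tuple


def adaptive_vote(
    sample: Callable[[], str],
    min_samples: int = 3,
    max_samples: int = 40,
    agreement: float = 0.7,
) -> Tuple[str, int]:
    """Sample answers until one holds at least `agreement` share of the votes
    (after `min_samples` draws), or until `max_samples` is reached.

    Returns the leading answer and the number of samples spent.
    """
    votes: Counter = Counter()
    for n in range(1, max_samples + 1):
        votes[sample()] += 1
        answer, count = votes.most_common(1)[0]
        if n >= min_samples and count / n >= agreement:
            return answer, n  # answers agree: stop spending compute
    return votes.most_common(1)[0][0], max_samples  # budget exhausted


def make_solver(p_correct: float, rng: random.Random) -> Callable[[], str]:
    """Hypothetical solver: returns "42" with probability p_correct, else a wrong guess."""
    wrong = [str(n) for n in range(100) if n != 42]

    def sample() -> str:
        return "42" if rng.random() < p_correct else rng.choice(wrong)

    return sample


if __name__ == "__main__":
    rng = random.Random(1)
    easy = make_solver(0.95, rng)  # usually right on the first try
    hard = make_solver(0.45, rng)  # right less than half the time
    print("easy:", adaptive_vote(easy))
    print("hard:", adaptive_vote(hard))
```

With these settings the easy solver usually stops after three or four samples, while the hard one tends to keep sampling much longer, frequently up to the 40-sample cap. Nothing in the loop ‘knows’ a question is hard; the disagreement among the model’s own answers does that job.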

In case you haven’t noticed, OpenAI and Google (and Anthropic and Meta) are in a tiny bit of an arms race right now. They are trying to capture the “LLM infrastructure layer”, and are working really hard to do so because the market has really strong winner-take-all dynamics. I’ve written about this in the past, when I discussed how the LLM market was similar to search:

Normally, when an upstart company wants to take a bite out of a big established market, they go low on price. This is especially true in a setting where the underlying product isn’t sticky. All things being equal, consumers will go for the best product along some c